merge upstream

@@ -74,7 +74,7 @@ Unlike proprietary AI platforms that dictate your roadmap, **Open WebUI puts you

|

||||

Security is a business-critical requirement. Open WebUI is built to support **SOC 2, HIPAA, GDPR, FedRAMP, and ISO 27001 compliance**, ensuring enterprise security best practices with **on-premise and air-gapped deployments**.

|

||||

|

||||

### ⚡ **Reliable, Scalable, and Performance-Optimized**

|

||||

Built for large-scale enterprise deployments with **multi-node high availability**, Open WebUI ensures **99.99% uptime**, optimized workloads, and **scalability across regions and business units**.

|

||||

Built for large-scale enterprise deployments with **multi-node high availability**, Open WebUI can be configured to ensure **99.99% uptime**, optimized workloads, and **scalability across regions and business units**.

|

||||

|

||||

### 💡 **Fully Customizable & Modular**

|

||||

Customize every aspect of Open WebUI to fit your enterprise’s needs. **White-label, extend, and integrate** seamlessly with **your existing systems**, including **LDAP, Active Directory, and custom AI models**.

|

||||

|

||||

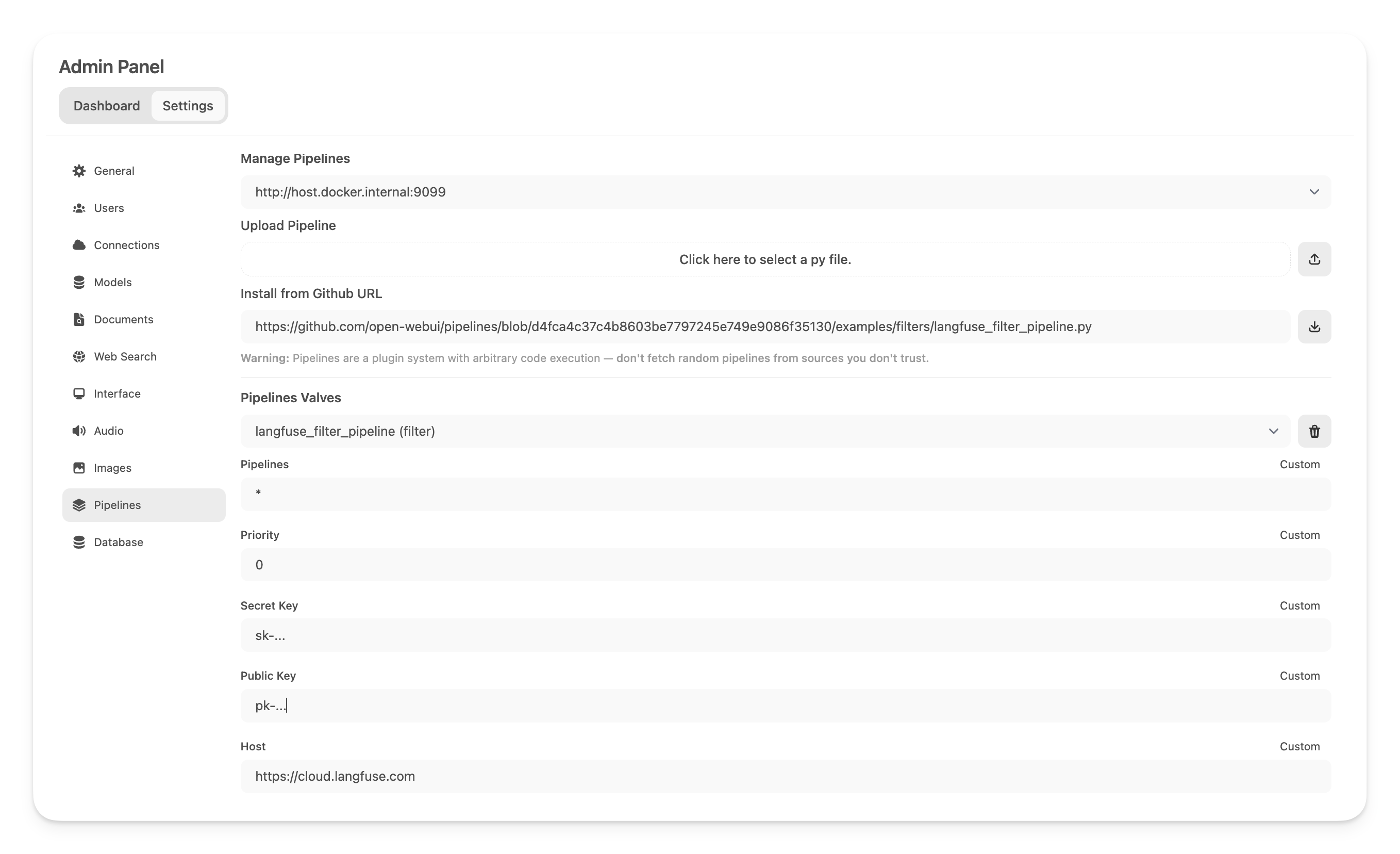

@@ -27,7 +27,12 @@ Integration Steps

|

||||

docker run -p 5001:5001 -e DOCLING_SERVE_ENABLE_UI=true quay.io/docling-project/docling-serve

|

||||

```

|

||||

|

||||

### Step 2: Configure OpenWebUI to use Docling

|

||||

* With GPU support:

|

||||

```bash
docker run --gpus all -p 5001:5001 -e DOCLING_SERVE_ENABLE_UI=true quay.io/docling-project/docling-serve
```

|

||||

|

||||

### Step 2: Configure Open WebUI to use Docling

|

||||

|

||||

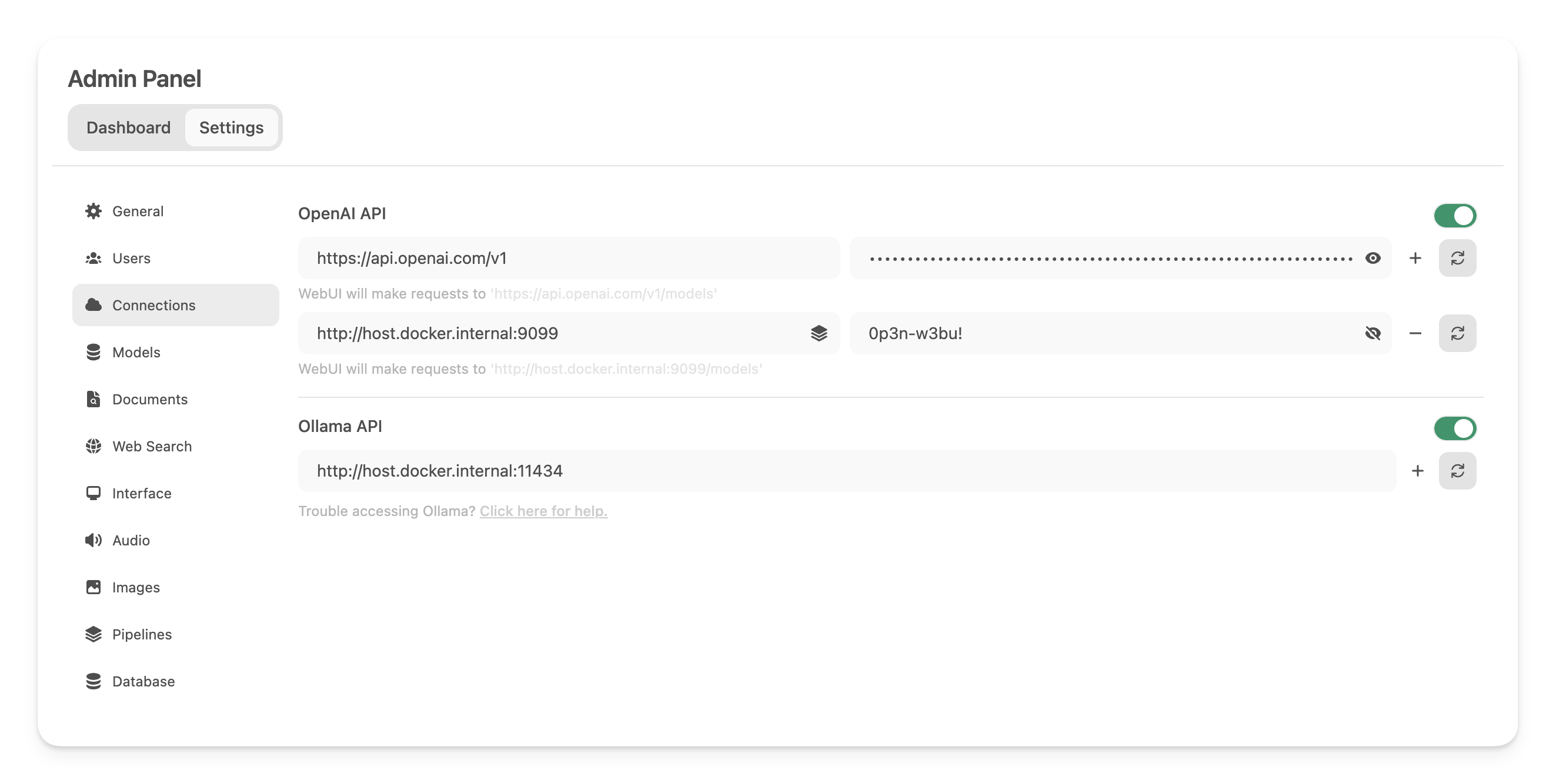

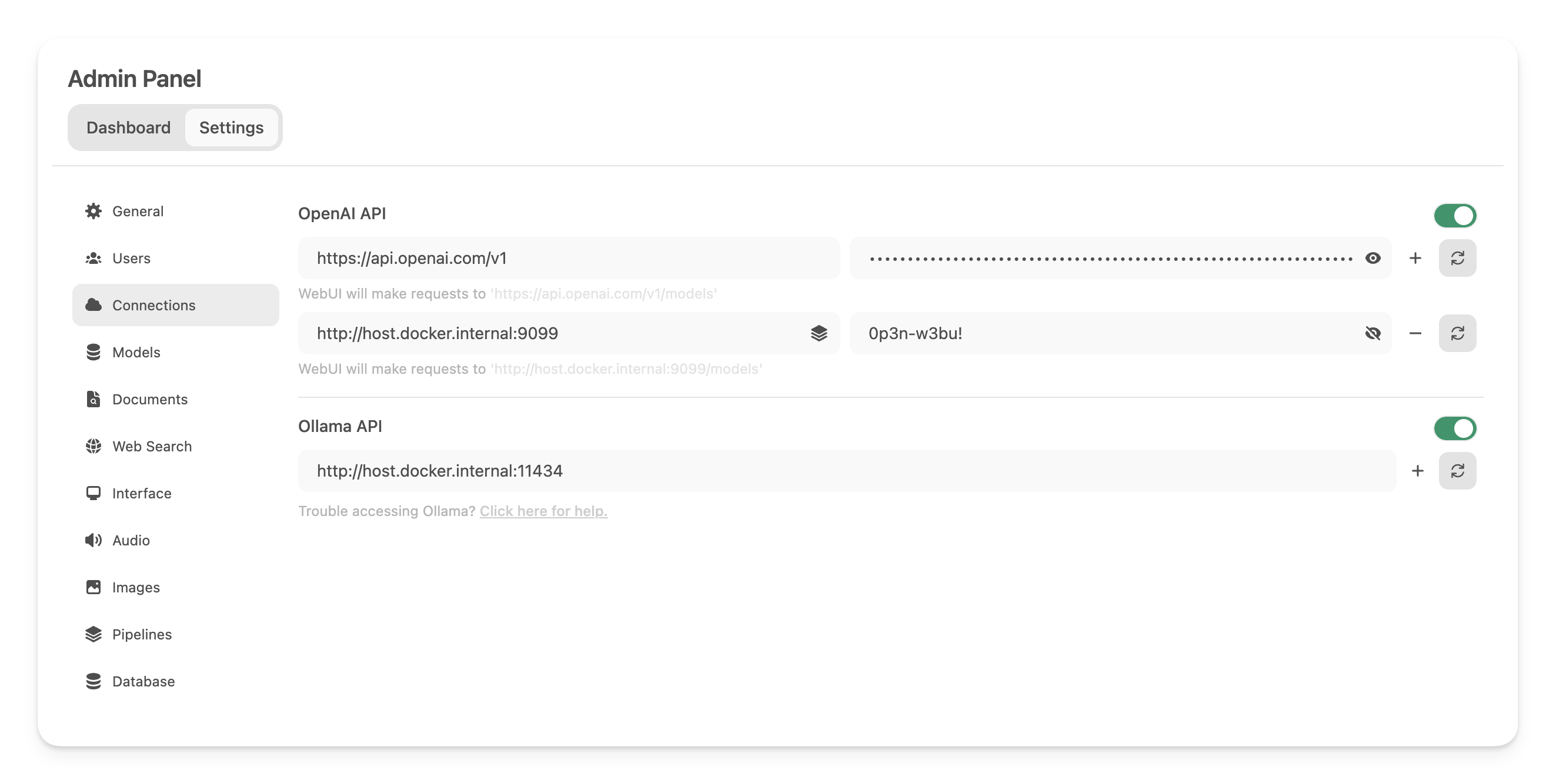

* Log in to your Open WebUI instance.

|

||||

* Navigate to the `Admin Panel` settings menu.

|

||||

|

||||

@@ -31,7 +31,7 @@ Integration Steps

|

||||

* Go to `API Keys` or `https://console.mistral.ai/api-keys`

|

||||

* Create a new key and make sure to copy it

|

||||

|

||||

### Step 3: Configure OpenWebUI to use Mistral OCR

|

||||

### Step 3: Configure Open WebUI to use Mistral OCR

|

||||

|

||||

* Log in to your Open WebUI instance.

|

||||

* Navigate to the `Admin Panel` settings menu.

|

||||

|

||||

336

docs/features/plugin/tools/development.mdx

Normal file

@@ -0,0 +1,336 @@

|

||||

---

|

||||

sidebar_position: 2

|

||||

title: "🛠️ Development"

|

||||

---

|

||||

|

||||

|

||||

|

||||

## Writing A Custom Toolkit

|

||||

|

||||

Toolkits are defined in a single Python file that contains a top-level docstring with metadata and a `Tools` class.

|

||||

|

||||

### Example Top-Level Docstring

|

||||

|

||||

```python
"""
title: String Inverse
author: Your Name
author_url: https://website.com
git_url: https://github.com/username/string-reverse.git
description: This tool calculates the inverse of a string
required_open_webui_version: 0.4.0
requirements: langchain-openai, langgraph, ollama, langchain_ollama
version: 0.4.0
licence: MIT
"""
```

|

||||

|

||||

### Tools Class

|

||||

|

||||

Tools have to be defined as methods within a class called `Tools`, with optional nested classes called `Valves` and `UserValves`, for example:

|

||||

|

||||

```python
from pydantic import BaseModel, Field


class Tools:
    def __init__(self):
        """Initialize the Tool."""
        self.valves = self.Valves()

    class Valves(BaseModel):
        api_key: str = Field("", description="Your API key here")

    def reverse_string(self, string: str) -> str:
        """
        Reverses the input string.
        :param string: The string to reverse
        """
        # example usage of valves
        if self.valves.api_key != "42":
            return "Wrong API key"
        return string[::-1]
```

|

||||

|

||||

### Type Hints

|

||||

Each tool must have type hints for its arguments. As of Open WebUI version 0.4.3, the types may also be nested, such as `queries_and_docs: list[tuple[str, int]]`. These type hints are used to generate the JSON schema that is sent to the model. Tools without type hints will work far less consistently.
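As an illustration of nested type hints, the sketch below defines a tool method that takes the `queries_and_docs` parameter mentioned above. The method name `summarize_pairs` and its behavior are hypothetical and are not part of Open WebUI; only the shape of the annotation comes from the text.

```python
class Tools:
    def summarize_pairs(self, queries_and_docs: list[tuple[str, int]]) -> str:
        """
        Summarize (query, document count) pairs.
        :param queries_and_docs: Pairs of a query string and a document count
        """
        # Hypothetical logic: produce one "query: count" line per pair.
        return "\n".join(f"{query}: {count}" for query, count in queries_and_docs)
```

The docstring follows the same `:param name:` convention as the other examples, so each argument carries both a type and a description.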

|

||||

|

||||

### Valves and UserValves - (optional, but HIGHLY encouraged)

|

||||

|

||||

Valves and UserValves are used to allow users to provide dynamic details such as an API key or a configuration option. These will create a fillable field or a bool switch in the GUI menu for the given function.

|

||||

|

||||

Valves can be configured only by admins, while UserValves can be configured by any user.

|

||||

|

||||

<details>

|

||||

<summary>Commented example</summary>

|

||||

|

||||

```python
from pydantic import BaseModel, Field


class Tools:
    # Notice the current indentation: Valves and UserValves must be declared as
    # attributes of a Tools, Filter or Pipe class. Here we take the
    # example of a Tool.
    class Valves(BaseModel):
        # Valves and UserValves inherit from pydantic's BaseModel. This
        # enables complex use cases like model validators etc.
        test_valve: int = Field(  # Notice the type hint: it is used to
            # choose the kind of UI element to show the user (buttons,
            # texts, etc).
            default=4,
            description="A valve controlling a numerical value"
            # required=False,  # you can enforce fields using True
        )
        pass
        # Note that this 'pass' helps for parsing and is recommended.

    # UserValves are defined the same way.
    class UserValves(BaseModel):
        test_user_valve: bool = Field(
            default=False, description="A user valve controlling a True/False (on/off) switch"
        )
        pass

    def __init__(self):
        self.valves = self.Valves()
        # Because they are set by the admin, they are accessible directly
        # upon code execution.
        pass

    # The __user__ handling is the same for Filters, Tools and Functions.
    def test_the_tool(self, message: str, __user__: dict):
        """
        This is a test tool. If the user asks you to test the tools, put any
        string you want in the message argument.

        :param message: Any string you want.
        :return: The same string as input.
        """
        # Because UserValves are defined per user they are only available
        # on use.
        # Note that although __user__ is a dict, __user__["valves"] is a
        # UserValves object. Hence you can access values like that:
        test_user_valve = __user__["valves"].test_user_valve
        # Or:
        test_user_valve = dict(__user__["valves"])["test_user_valve"]
        # But this will return the default value instead of the actual value:
        # test_user_valve = __user__["valves"]["test_user_valve"]  # Do not do that!

        return message + f"\nThe user valve set value is: {test_user_valve}"
```

|

||||

</details>

|

||||

|

||||

### Optional Arguments

|

||||

Below is a list of optional arguments your tools can depend on:

|

||||

- `__event_emitter__`: Emit events (see following section)

|

||||

- `__event_call__`: Same as event emitter but can be used for user interactions

|

||||

- `__user__`: A dictionary with user information. It also contains the `UserValves` object in `__user__["valves"]`.

|

||||

- `__metadata__`: Dictionary with chat metadata

|

||||

- `__messages__`: List of previous messages

|

||||

- `__files__`: Attached files

|

||||

- `__model__`: Model name

|

||||

|

||||

Add them as arguments to any method of your `Tools` class, as with `__user__` in the example above.
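A minimal sketch of a tool method that requests two of these optional arguments. The method name `count_messages` and the assumption that `__user__` carries a `name` key are illustrative only; check the actual contents of these arguments in your Open WebUI version.

```python
class Tools:
    def count_messages(
        self, topic: str, __user__: dict = None, __messages__: list = None
    ) -> str:
        """
        Report how many previous messages are in the chat.
        :param topic: A short label to include in the report
        """
        # Both arguments are optional here, so fall back to empty values.
        user = __user__ or {}
        messages = __messages__ or []
        name = user.get("name", "unknown user")  # the "name" key is an assumption
        return f"{topic}: {len(messages)} previous message(s) for {name}"
```

Giving the optional arguments a default of `None` keeps the method callable in a plain Python session, which makes it easier to test outside of Open WebUI.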

|

||||

|

||||

### Event Emitters

|

||||

Event Emitters add information to the chat interface. Like Filter Outlets, Event Emitters can append content to the chat. Unlike Filter Outlets, they cannot strip information. Emitters can also be called at any stage of the Tool's execution.

|

||||

|

||||

If the model seems to be unable to call the tool, make sure the tool is enabled (either via the Model page or via the `+` sign next to the chat input field). You can also change the `Function Calling` setting in the `Advanced Params` section of the Model page from `Default` to `Native`.

There are three types of Event Emitters: status, message, and citation.

|

||||

|

||||

#### Status

|

||||

Status events add statuses to a message while the Tool is performing steps, and they can be emitted at any stage of the Tool. The statuses appear right above the message content. They are especially useful for Tools that delay the LLM response or process large amounts of information, because they let you tell users what is being processed in real time.

|

||||

|

||||

```python
await __event_emitter__(
    {
        "type": "status",  # We set the type here
        "data": {"description": "Message that shows up in the chat", "done": False, "hidden": False},
        # Note done is False here indicating we are still emitting statuses
    }
)
```

|

||||

|

||||

<details>

|

||||

<summary>Example</summary>

|

||||

|

||||

```python
async def test_function(
    self, prompt: str, __user__: dict, __event_emitter__=None
) -> str:
    """
    This is a demo

    :param prompt: this is a test parameter
    """
    try:
        await __event_emitter__(
            {
                "type": "status",  # We set the type here
                "data": {"description": "Message that shows up in the chat", "done": False},
                # Note done is False here indicating we are still emitting statuses
            }
        )

        # Do some other logic here
        await __event_emitter__(
            {
                "type": "status",
                "data": {"description": "Completed a task message", "done": True, "hidden": False},
                # Note done is True here indicating we are done emitting statuses
                # You can also set "hidden": True if you want to remove the status once the message is returned
            }
        )

    except Exception as e:
        await __event_emitter__(
            {
                "type": "status",
                "data": {"description": f"An error occurred: {e}", "done": True},
            }
        )

        return f"Tell the user: {e}"
```

|

||||

</details>
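Because `__event_emitter__` defaults to `None` in the examples, a small wrapper can guard against a missing emitter. The sketch below is one possible way to do that; `emit_status` and `fake_emitter` are hypothetical names, and the fake emitter only stands in for the real `__event_emitter__` so the snippet can run outside Open WebUI.

```python
import asyncio


async def emit_status(emitter, description: str, done: bool = False) -> None:
    """Send a status event, doing nothing when no emitter was provided."""
    if emitter is None:
        return
    await emitter(
        {
            "type": "status",
            "data": {"description": description, "done": done, "hidden": False},
        }
    )


async def demo() -> list:
    events = []

    async def fake_emitter(event: dict) -> None:
        # Stand-in for __event_emitter__: records events instead of displaying them.
        events.append(event)

    await emit_status(fake_emitter, "Working...")
    await emit_status(fake_emitter, "Finished", done=True)
    await emit_status(None, "Ignored")  # no emitter: silently skipped
    return events


events = asyncio.run(demo())
```

Inside a real tool method you would pass `__event_emitter__` as the first argument instead of the fake emitter.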

|

||||

|

||||

#### Message

|

||||

This type is used to append a message to the chat at any stage in the Tool. This means that you can append messages, embed images, and even render web pages before, during, or after the LLM response.

|

||||

|

||||

```python
await __event_emitter__(
    {
        "type": "message",  # We set the type here
        "data": {"content": "This message will be appended to the chat."},
        # Note that with message types we do NOT have to set a done condition
    }
)
```

|

||||

|

||||

<details>

|

||||

<summary>Example</summary>

|

||||

|

||||

```python
async def test_function(
    self, prompt: str, __user__: dict, __event_emitter__=None
) -> str:
    """
    This is a demo

    :param prompt: this is a test parameter
    """
    try:
        await __event_emitter__(
            {
                "type": "message",  # We set the type here
                "data": {"content": "This message will be appended to the chat."},
                # Note that with message types we do NOT have to set a done condition
            }
        )

    except Exception as e:
        await __event_emitter__(
            {
                "type": "status",
                "data": {"description": f"An error occurred: {e}", "done": True},
            }
        )

        return f"Tell the user: {e}"
```

|

||||

</details>

|

||||

|

||||

#### Citations

|

||||

This type is used to provide citations or references in the chat. You can utilize it to specify the content, the source, and any relevant metadata. Below is an example of how to emit a citation event:

|

||||

|

||||

```python
# Requires `from datetime import datetime`.
# `content`, `title`, and `url` are placeholders for your own values.
await __event_emitter__(
    {
        "type": "citation",
        "data": {
            "document": [content],
            "metadata": [
                {
                    "date_accessed": datetime.now().isoformat(),
                    "source": title,
                }
            ],
            "source": {"name": title, "url": url},
        },
    }
)
```

|

||||

If you are sending multiple citations, you can iterate over citations and call the emitter multiple times. When implementing custom citations, ensure that you set `self.citation = False` in your `Tools` class `__init__` method. Otherwise, the built-in citations will override the ones you have pushed in. For example:

|

||||

|

||||

```python
def __init__(self):
    self.citation = False
```

|

||||

|

||||

Warning: if you set `self.citation = True`, this will replace any custom citations you send with the automatically generated return citation. By disabling it, you can fully manage your own citation references.
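Emitting several citations by iterating over them can be sketched as follows. The helper `emit_citations`, the `sources` list with its `content`, `title`, and `url` keys, and the `fake_emitter` are all hypothetical; the event payload mirrors the citation structure shown above.

```python
import asyncio
from datetime import datetime


async def emit_citations(emitter, sources: list[dict]) -> None:
    """Emit one citation event per source."""
    for source in sources:
        await emitter(
            {
                "type": "citation",
                "data": {
                    "document": [source["content"]],
                    "metadata": [
                        {
                            "date_accessed": datetime.now().isoformat(),
                            "source": source["title"],
                        }
                    ],
                    "source": {"name": source["title"], "url": source["url"]},
                },
            }
        )


async def demo() -> list:
    emitted = []

    async def fake_emitter(event: dict) -> None:
        # Stand-in for __event_emitter__: records events instead of displaying them.
        emitted.append(event)

    sources = [
        {"content": "First passage", "title": "Doc A", "url": "http://example.com/a"},
        {"content": "Second passage", "title": "Doc B", "url": "http://example.com/b"},
    ]
    await emit_citations(fake_emitter, sources)
    return emitted


emitted = asyncio.run(demo())
```

In a real tool, remember to set `self.citation = False` in `__init__` so these custom citations are not replaced.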

|

||||

|

||||

<details>

|

||||

<summary>Example</summary>

|

||||

|

||||

```python
from datetime import datetime

from pydantic import BaseModel, Field


class Tools:
    class UserValves(BaseModel):
        test: bool = Field(
            default=True, description="test"
        )

    def __init__(self):
        self.citation = False

    async def test_function(
        self, prompt: str, __user__: dict, __event_emitter__=None
    ) -> str:
        """
        This is a demo that just creates a citation

        :param prompt: this is a test parameter
        """
        await __event_emitter__(
            {
                "type": "citation",
                "data": {
                    "document": ["This message will be appended to the chat as a citation when clicked into"],
                    "metadata": [
                        {
                            "date_accessed": datetime.now().isoformat(),
                            "source": "Title of the content",
                        }
                    ],
                    "source": {"name": "Title of the content", "url": "http://link-to-citation"},
                },
            }
        )
```

|

||||

</details>

|

||||

|

||||

## External packages

|

||||

|

||||

In the Tools definition metadata you can specify custom packages. When you click `Save` the line will be parsed and `pip install` will be run on all requirements at once.

|

||||

|

||||

Keep in mind that as pip is used in the same process as Open WebUI, the UI will be completely unresponsive during the installation.

|

||||

|

||||

No measures are taken to handle package conflicts with Open WebUI's requirements. That means that specifying requirements can break Open WebUI if you're not careful. You might be able to work around this by specifying `open-webui` itself as a requirement.

|

||||

|

||||

|

||||

<details>

|

||||

<summary>Example</summary>

|

||||

|

||||

```python
"""
title: myToolName
author: myName
funding_url: [any link here will be shown behind a `Heart` button for users to show their support to you]
version: 1.0.0
# the version is displayed in the UI to help users keep track of updates.
license: GPLv3
description: [recommended]
requirements: package1>=2.7.0,package2,package3
"""
```

|

||||

|

||||

</details>
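To make the shape of the `requirements` line concrete, here is a rough sketch of how such a comma-separated line could be split into individual specifiers. This is an illustration only and is not Open WebUI's actual parser; the function name `split_requirements` is hypothetical.

```python
def split_requirements(line: str) -> list[str]:
    """Split the value of a `requirements:` metadata line into specifiers."""
    # Keep everything after the first colon, then split on commas.
    _, _, value = line.partition(":")
    return [item.strip() for item in value.split(",") if item.strip()]


specs = split_requirements("requirements: package1>=2.7.0,package2,package3")
```

Each resulting specifier is a string of the kind you would pass to `pip install`, so version pins such as `package1>=2.7.0` are kept intact.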

|

||||

@@ -1,377 +1,139 @@

|

||||

---

|

||||

sidebar_position: 2

|

||||

title: "⚙️ Tools"

|

||||

sidebar_position: 2

|

||||

title: "⚙️ Tools"

|

||||

---

|

||||

|

||||

## What are Tools?

|

||||

Tools are python scripts that are provided to an LLM at the time of the request. Tools allow LLMs to perform actions and receive additional context as a result. Generally speaking, your LLM of choice will need to support function calling for tools to be reliably utilized.

|

||||

# ⚙️ What are Tools?

|

||||

|

||||

Tools enable many use cases for chats, including web search, web scraping, and API interactions within the chat.

|

||||

Tools are small Python scripts that add superpowers to your LLM. When enabled, they allow your chatbot to do amazing things — like search the web, scrape data, generate images, talk back using AI voices, and more.

|

||||

|

||||

Many Tools are available to use on the [Community Website](https://openwebui.com/tools) and can easily be imported into your Open WebUI instance.

|

||||

Think of Tools as useful plugins that your AI can use when chatting with you.

|

||||

|

||||

## How can I use Tools?

|

||||

[Once installed](#how-to-install-tools), Tools can be used by assigning them to any LLM that supports function calling and then enabling that Tool. To assign a Tool to a model, you need to navigate to Workspace => Models. Here you can select the model for which you’d like to enable any Tools.

|

||||

---

|

||||

|

||||

Once you click the pencil icon to edit the model settings, scroll down to the Tools section and check any Tools you wish to enable. Once done you must click save.

|

||||

## 🚀 What Can Tools Help Me Do?

|

||||

|

||||

Now that Tools are enabled for the model, you can click the “+” icon when chatting with an LLM to use various Tools. Please keep in mind that enabling a Tool does not force it to be used. It means the LLM will be provided the option to call this Tool.

|

||||

Here are just a few examples of what Tools let your AI assistant do:

|

||||

|

||||

Lastly, we do provide a filter function on the community site that allows LLMs to autoselect Tools without you needing to enable them in the “+” icon menu: https://openwebui.com/f/hub/autotool_filter/

|

||||

|

||||

Please note: when using the AutoTool Filter, you will still need to take the steps above to enable the Tools per model.

|

||||

|

||||

## How to install Tools

|

||||

The Tools import process is quite simple. You will have two options:

|

||||

|

||||

### Download and import manually

|

||||

Navigate to the community site: https://openwebui.com/tools/

|

||||

1) Click on the Tool you wish to import

|

||||

2) Click the blue “Get” button in the top right-hand corner of the page

|

||||

3) Click “Download as JSON export”

|

||||

4) You can now upload the Tool into Open WebUI by navigating to Workspace => Tools and clicking “Import Tools”

|

||||

|

||||

### Import via your Open WebUI URL

|

||||

1) Navigate to the community site: https://openwebui.com/tools/

|

||||

2) Click on the Tool you wish to import

|

||||

3) Click the blue “Get” button in the top right-hand corner of the page

|

||||

4) Enter the IP address of your Open WebUI instance and click “Import to WebUI” which will automatically open your instance and allow you to import the Tool.

|

||||

|

||||

Note: You can install your own Tools and other Tools not tracked on the community site using the manual import method. Please do not import Tools you do not understand or are not from a trustworthy source. Running unknown code is ALWAYS a risk.

|

||||

|

||||

## What sorts of things can Tools do?

|

||||

Tools enable diverse use cases for interactive conversations by providing a wide range of functionality such as:

|

||||

|

||||

- [**Web Search**](https://openwebui.com/t/constliakos/web_search/): Perform live web searches to fetch real-time information.

|

||||

- [**Image Generation**](https://openwebui.com/t/justinrahb/image_gen/): Generate images based on the user prompt

|

||||

- [**External Voice Synthesis**](https://openwebui.com/t/justinrahb/elevenlabs_tts/): Make API requests within the chat to integrate external voice synthesis service ElevenLabs and generate audio based on the LLM output.

|

||||

|

||||

## Writing A Custom Toolkit

|

||||

|

||||

Toolkits are defined in a single Python file, with a top level docstring with metadata and a `Tools` class.

|

||||

|

||||

### Example Top-Level Docstring

|

||||

|

||||

```python

|

||||

"""

|

||||

title: String Inverse

|

||||

author: Your Name

|

||||

author_url: https://website.com

|

||||

git_url: https://github.com/username/string-reverse.git

|

||||

description: This tool calculates the inverse of a string

|

||||

required_open_webui_version: 0.4.0

|

||||

requirements: langchain-openai, langgraph, ollama, langchain_ollama

|

||||

version: 0.4.0

|

||||

licence: MIT

|

||||

"""

|

||||

```

|

||||

|

||||

### Tools Class

|

||||

|

||||

Tools have to be defined as methods within a class called `Tools`, with optional subclasses called `Valves` and `UserValves`, for example:

|

||||

|

||||

```python

|

||||

class Tools:

|

||||

def __init__(self):

|

||||

"""Initialize the Tool."""

|

||||

self.valves = self.Valves()

|

||||

|

||||

class Valves(BaseModel):

|

||||

api_key: str = Field("", description="Your API key here")

|

||||

|

||||

def reverse_string(self, string: str) -> str:

|

||||

"""

|

||||

Reverses the input string.

|

||||

:param string: The string to reverse

|

||||

"""

|

||||

# example usage of valves

|

||||

if self.valves.api_key != "42":

|

||||

return "Wrong API key"

|

||||

return string[::-1]

|

||||

```

|

||||

|

||||

### Type Hints

|

||||

Each tool must have type hints for arguments. As of version OpenWebUI version 0.4.3, the types may also be nested, such as `queries_and_docs: list[tuple[str, int]]`. Those type hints are used to generate the JSON schema that is sent to the model. Tools without type hints will work with a lot less consistency.

|

||||

|

||||

### Valves and UserValves - (optional, but HIGHLY encouraged)

|

||||

|

||||

Valves and UserValves are used to allow users to provide dynamic details such as an API key or a configuration option. These will create a fillable field or a bool switch in the GUI menu for the given function.

|

||||

|

||||

Valves are configurable by admins alone and UserValves are configurable by any users.

|

||||

|

||||

<details>

|

||||

<summary>Commented example</summary>

|

||||

|

||||

```

|

||||

from pydantic import BaseModel, Field

|

||||

|

||||

class Tools:

|

||||

# Notice the current indentation: Valves and UserValves must be declared as

|

||||

# attributes of a Tools, Filter or Pipe class. Here we take the

|

||||

# example of a Tool.

|

||||

class Valves(BaseModel):

|

||||

# Valves and UserValves inherit from pydantic's BaseModel. This

|

||||

# enables complex use cases like model validators etc.

|

||||

test_valve: int = Field( # Notice the type hint: it is used to

|

||||

# choose the kind of UI element to show the user (buttons,

|

||||

# texts, etc).

|

||||

default=4,

|

||||

description="A valve controlling a numberical value"

|

||||

# required=False, # you can enforce fields using True

|

||||

)

|

||||

pass

|

||||

# Note that this 'pass' helps for parsing and is recommended.

|

||||

|

||||

# UserValves are defined the same way.

|

||||

class UserValves(BaseModel):

|

||||

test_user_valve: bool = Field(

|

||||

default=False, description="A user valve controlling a True/False (on/off) switch"

|

||||

)

|

||||

pass

|

||||

|

||||

def __init__(self):

|

||||

self.valves = self.Valves()

|

||||

# Because they are set by the admin, they are accessible directly

|

||||

# upon code execution.

|

||||

pass

|

||||

|

||||

# The __user__ handling is the same for Filters, Tools and Functions.

|

||||

def test_the_tool(self, message: str, __user__: dict):

|

||||

"""

|

||||

This is a test tool. If the user asks you to test the tools, put any

|

||||

string you want in the message argument.

|

||||

|

||||

:param message: Any string you want.

|

||||

:return: The same string as input.

|

||||

"""

|

||||

# Because UserValves are defined per user they are only available

|

||||

# on use.

|

||||

# Note that although __user__ is a dict, __user__["valves"] is a

|

||||

# UserValves object. Hence you can access values like that:

|

||||

test_user_valve = __user__["valves"].test_user_valve

|

||||

# Or:

|

||||

test_user_valve = dict(__user__["valves"])["test_user_valve"]

|

||||

# But this will return the default value instead of the actual value:

|

||||

# test_user_valve = __user__["valves"]["test_user_valve"] # Do not do that!

|

||||

|

||||

return message + f"\nThe user valve set value is: {test_user_valve}"

|

||||

|

||||

```

|

||||

</details>

|

||||

|

||||

### Optional Arguments

|

||||

Below is a list of optional arguments your tools can depend on:

|

||||

- `__event_emitter__`: Emit events (see following section)

|

||||

- `__event_call__`: Same as event emitter but can be used for user interactions

|

||||

- `__user__`: A dictionary with user information. It also contains the `UserValves` object in `__user__["valves"]`.

|

||||

- `__metadata__`: Dictionary with chat metadata

|

||||

- `__messages__`: List of previous messages

|

||||

- `__files__`: Attached files

|

||||

- `__model__`: Model name

|

||||

|

||||

Just add them as argument to any method of your Tool class just like `__user__` in the example above.

|

||||

|

||||

### Event Emitters

|

||||

Event Emitters are used to add additional information to the chat interface. Similarly to Filter Outlets, Event Emitters are capable of appending content to the chat. Unlike Filter Outlets, they are not capable of stripping information. Additionally, emitters can be activated at any stage during the Tool.

|

||||

|

||||

There are two different types of Event Emitters:

|

||||

|

||||

If the model seems to be unable to call the tool, make sure it is enabled (either via the Model page or via the `+` sign next to the chat input field). You can also turn the `Function Calling` argument of the `Advanced Params` section of the Model page from `Default` to `Native`.

|

||||

|

||||

#### Status

|

||||

This is used to add statuses to a message while it is performing steps. These can be done at any stage during the Tool. These statuses appear right above the message content. These are very useful for Tools that delay the LLM response or process large amounts of information. This allows you to inform users what is being processed in real-time.

|

||||

|

||||

```

|

||||

await __event_emitter__(

|

||||

{

|

||||

"type": "status", # We set the type here

|

||||

"data": {"description": "Message that shows up in the chat", "done": False, "hidden": False},

|

||||

# Note done is False here indicating we are still emitting statuses

|

||||

}

|

||||

)

|

||||

```

|

||||

|

||||

<details>

|

||||

<summary>Example</summary>

|

||||

|

||||

```

|

||||

async def test_function(

|

||||

self, prompt: str, __user__: dict, __event_emitter__=None

|

||||

) -> str:

|

||||

"""

|

||||

This is a demo

|

||||

|

||||

:param test: this is a test parameter

|

||||

"""

|

||||

|

||||

await __event_emitter__(

|

||||

{

|

||||

"type": "status", # We set the type here

|

||||

"data": {"description": "Message that shows up in the chat", "done": False},

|

||||

# Note done is False here indicating we are still emitting statuses

|

||||

}

|

||||

)

|

||||

|

||||

# Do some other logic here

|

||||

await __event_emitter__(

|

||||

{

|

||||

"type": "status",

|

||||

"data": {"description": "Completed a task message", "done": True, "hidden": False},

|

||||

# Note done is True here indicating we are done emitting statuses

|

||||

# You can also set "hidden": True if you want to remove the status once the message is returned

|

||||

}

|

||||

)

|

||||

|

||||

except Exception as e:

|

||||

await __event_emitter__(

|

||||

{

|

||||

"type": "status",

|

||||

"data": {"description": f"An error occured: {e}", "done": True},

|

||||

}

|

||||

)

|

||||

|

||||

return f"Tell the user: {e}"

|

||||

```

|

||||

</details>

|

||||

|

||||

#### Message

|

||||

This type is used to append a message to the LLM at any stage in the Tool. This means that you can append messages, embed images, and even render web pages before, or after, or during the LLM response.

|

||||

|

||||

```

|

||||

await __event_emitter__(

|

||||

{

|

||||

"type": "message", # We set the type here

|

||||

"data": {"content": "This message will be appended to the chat."},

|

||||

# Note that with message types we do NOT have to set a done condition

|

||||

}

|

||||

)

|

||||

```

|

||||

|

||||

<details>

|

||||

<summary>Example</summary>

|

||||

|

||||

```

|

||||

async def test_function(

|

||||

self, prompt: str, __user__: dict, __event_emitter__=None

|

||||

) -> str:

|

||||

"""

|

||||

This is a demo

|

||||

|

||||

:param test: this is a test parameter

|

||||

"""

|

||||

|

||||

await __event_emitter__(

|

||||

{

|

||||

"type": "message", # We set the type here

|

||||

"data": {"content": "This message will be appended to the chat."},

|

||||

# Note that with message types we do NOT have to set a done condition

|

||||

}

|

||||

)

|

||||

|

||||

except Exception as e:

|

||||

await __event_emitter__(

|

||||

{

|

||||

"type": "status",

|

||||

"data": {"description": f"An error occured: {e}", "done": True},

|

||||

}

|

||||

)

|

||||

|

||||

return f"Tell the user: {e}"

|

||||

```

|

||||

</details>

|

||||

|

||||

#### Citations

|

||||

This type is used to provide citations or references in the chat. You can utilize it to specify the content, the source, and any relevant metadata. Below is an example of how to emit a citation event:

|

||||

|

||||

```

|

||||

await __event_emitter__(

|

||||

{

|

||||

"type": "citation",

|

||||

"data": {

|

||||

"document": [content],

|

||||

"metadata": [

|

||||

{

|

||||

"date_accessed": datetime.now().isoformat(),

|

||||

"source": title,

|

||||

}

|

||||

],

|

||||

"source": {"name": title, "url": url},

|

||||

},

|

||||

}

|

||||

)

|

||||

```

|

||||

If you are sending multiple citations, you can iterate over citations and call the emitter multiple times. When implementing custom citations, ensure that you set `self.citation = False` in your `Tools` class `__init__` method. Otherwise, the built-in citations will override the ones you have pushed in. For example:

|

||||

|

||||

```python

|

||||

def __init__(self):

|

||||

self.citation = False

|

||||

```

|

||||

|

||||

Warning: if you set `self.citation = True`, this will replace any custom citations you send with the automatically generated return citation. By disabling it, you can fully manage your own citation references.

|

||||

|

||||

<details>

|

||||

<summary>Example</summary>

|

||||

|

||||

```python
from datetime import datetime

from pydantic import BaseModel, Field


class Tools:
    class UserValves(BaseModel):
        test: bool = Field(
            default=True, description="test"
        )

    def __init__(self):
        # Disable built-in citations so the custom one below is not overridden.
        self.citation = False

    async def test_function(
        self, prompt: str, __user__: dict, __event_emitter__=None
    ) -> str:
        """
        This is a demo that just creates a citation

        :param prompt: this is a test parameter
        """
        # Placeholder title reused for the metadata and the source entry.
        title = "Title of the content"
        await __event_emitter__(
            {
                "type": "citation",
                "data": {
                    "document": ["This message will be appended to the chat as a citation when clicked into"],
                    "metadata": [
                        {
                            "date_accessed": datetime.now().isoformat(),
                            "source": title,
                        }
                    ],
                    "source": {"name": title, "url": "http://link-to-citation"},
                },
            }
        )
        return "Citation emitted"
```

|

||||

</details>

|

||||

|

||||

## External packages

|

||||

|

||||

In the Tools definition metadata, you can specify custom packages. When you click `Save`, the `requirements` line is parsed and `pip install` is run on all requirements at once.

Keep in mind that because pip runs in the same process as Open WebUI, the UI will be completely unresponsive during the installation.

No measures are taken to handle package conflicts with Open WebUI's own requirements, so specifying requirements can break Open WebUI if you're not careful. You might be able to work around this by specifying `open-webui` itself as a requirement.

|

||||

|

||||

|

||||

<details>

|

||||

<summary>Example</summary>

|

||||

|

||||

```
"""
title: myToolName
author: myName
funding_url: [any link here will be shown behind a `Heart` button for users to show their support to you]
version: 1.0.0
# the version is displayed in the UI to help users keep track of updates.
license: GPLv3
description: [recommended]
requirements: package1>=2.7.0,package2,package3
"""
```

|

||||

|

||||

</details>
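To make the "parsed and installed at once" behaviour more concrete, here is a minimal sketch of how a comma-separated `requirements` line could be turned into a single `pip install` command. It is an illustration under assumptions, not Open WebUI's actual parser: the `parse_requirements` function and the regular expression are hypothetical.

```python
import re


def parse_requirements(frontmatter: str) -> list:
    """Return the package specifiers listed on the `requirements:` line."""
    match = re.search(r"^requirements:[ \t]*(.*)$", frontmatter, flags=re.MULTILINE)
    if match is None:
        return []
    # Split on commas and drop empty entries and surrounding whitespace.
    return [spec.strip() for spec in match.group(1).split(",") if spec.strip()]


example = '''
title: myToolName
version: 1.0.0
requirements: package1>=2.7.0,package2,package3
'''

packages = parse_requirements(example)
# One command covers every requirement, matching the "all at once" behaviour.
pip_command = ["pip", "install", *packages]
```

Because everything is installed in one command into the environment Open WebUI itself runs in, a specifier that pins a conflicting version of a shared dependency can affect the whole application, which is why the warning above matters.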

|

||||

- 🌍 Web Search: Get real-time answers by searching the internet.

|

||||

- 🖼️ Image Generation: Create images from your prompts.

|

||||

- 🔊 Voice Output: Generate AI voices using ElevenLabs.

|

||||

|

||||

Explore ready-to-use tools here:

|

||||

🧰 [Tools Showcase](https://openwebui.com/tools)

|

||||

|

||||

---

|

||||

|

||||

|

||||

## 📦 How to Install Tools

|

||||

|

||||

There are two easy ways to install Tools in Open WebUI:

|

||||

|

||||

1. Go to [Community Tool Library](https://openwebui.com/tools)

|

||||

2. Choose a Tool, then click the Get button.

|

||||

3. Enter your Open WebUI instance’s IP address or URL.

|

||||

4. Click “Import to WebUI” — done!

|

||||

|

||||

🛑 Safety Tip: Never import a Tool you don’t recognize or trust. These are Python scripts and might run unsafe code.

|

||||

|

||||

---

|

||||

|

||||

|

||||

## 🔧 How to Use Tools in Open WebUI

|

||||

|

||||

Once you've installed Tools (we’ll show you how below), here’s how to enable and use them:

|

||||

|

||||

You have two ways to enable a Tool for your model:

|

||||

|

||||

### ➕ Option 1: Enable from the Chat Window

|

||||

|

||||

While chatting, click the ➕ icon in the input area. You’ll see a list of available Tools — you can enable any of them on the fly for that session.

|

||||

|

||||

💡 Tip: Enabling a Tool gives the model permission to use it — but it may not use it unless it's useful for the task.

|

||||

|

||||

### ✏️ Option 2: Enable by Default (Recommended for Frequent Use)

|

||||

1. Go to: Workspace ➡️ Models

|

||||

2. Choose the model you’re using (like GPT-4 or Llama 2) and click the ✏️ edit icon.

|

||||

3. Scroll down to the “Tools” section.

|

||||

4. ✅ Check the Tools you want your model to have access to by default.

|

||||

5. Click Save.

|

||||

|

||||

This ensures the model always has these Tools ready to use whenever you chat with it.

|

||||

|

||||

You can also let your LLM auto-select the right Tools using the AutoTool Filter:

|

||||

|

||||

🔗 [AutoTool Filter](https://openwebui.com/f/hub/autotool_filter/)

|

||||

|

||||

🎯 Note: Even when using AutoTool, you still need to enable your Tools using Option 2.

|

||||

|

||||

✅ And that’s it — your LLM is now Tool-powered! You're ready to supercharge your chats with web search, image generation, voice output, and more.

|

||||

|

||||

---

|

||||

|

||||

## 🧠 Choosing How Tools Are Used: Default vs Native

|

||||

|

||||

Once Tools are enabled for your model, Open WebUI gives you two different ways to let your LLM use them in conversations.

|

||||

|

||||

You can decide how the model should call Tools by choosing between:

|

||||

|

||||

- 🟡 Default Mode (Prompt-based)

|

||||

- 🟢 Native Mode (Built-in function calling)

|

||||

|

||||

Let’s break it down:

|

||||

|

||||

### 🟡 Default Mode (Prompt-based Tool Triggering)

|

||||

|

||||

This is the default setting in Open WebUI.

|

||||

|

||||

Here, your LLM doesn’t need to natively support function calling. Instead, Open WebUI guides the model with a tool-selection prompt template so that it can choose and use a Tool.

|

||||

|

||||

✅ Works with almost any model

|

||||

✅ Great way to unlock Tools with basic or local models

|

||||

❗ Not as reliable or flexible as Native Mode when chaining tools

|

||||

|

||||

### 🟢 Native Mode (Function Calling Built-In)

|

||||

|

||||

If your model does support “native” function calling (like GPT-4o or GPT-3.5-turbo-1106), you can use this powerful mode to let the LLM decide — in real time — when and how to call multiple Tools during a single chat message.

|

||||

|

||||

✅ Fast, accurate, and can chain multiple Tools in one response

|

||||

✅ The most natural and advanced experience

|

||||

❗ Requires a model that actually supports native function calling

|

||||

|

||||

### ✳️ How to Switch Between Modes

|

||||

|

||||

Want to enable native function calling in your chats? Here's how:

|

||||

|

||||

|

||||

|

||||

1. Open the chat window with your model.

|

||||

2. Click ⚙️ Chat Controls > Advanced Params.

|

||||

3. Look for the Function Calling setting and switch it from Default → Native

|

||||

|

||||

That’s it! Your chat is now using true native Tool support (as long as the model supports it).

|

||||

|

||||

➡️ We recommend using GPT-4o or another OpenAI model for the best native function-calling experience.

|

||||

🔎 Some local models may claim support, but often struggle with accurate or complex Tool usage.

|

||||

|

||||

💡 Summary:

|

||||

|

||||

| Mode    | Who it’s for | Pros                                 | Cons                               |
|---------|--------------|--------------------------------------|------------------------------------|
| Default | Any model    | Broad compatibility, safer, flexible | May be less accurate or slower     |
| Native  | GPT-4o, etc. | Fast, smart, excellent tool chaining | Needs proper function call support |

|

||||

|

||||

Choose the one that works best for your setup — and remember, you can always switch on the fly via Chat Controls.

|

||||

|

||||

👏 And that's it — your LLM now knows how and when to use Tools, intelligently.

|

||||

|

||||

---

|

||||

|

||||

## 🧠 Summary

|

||||

|

||||

Tools are add-ons that help your AI model do much more than just chat. From answering real-time questions to generating images or speaking out loud — Tools bring your AI to life.

|

||||

|

||||

- Visit: [https://openwebui.com/tools](https://openwebui.com/tools) to discover new Tools.

|

||||

- Install them manually or with one-click.

|

||||

- Enable them per model from Workspace ➡️ Models.

|

||||

- Use them in chat by clicking ➕

|

||||

|

||||

Now go make your AI waaaaay smarter 🤖✨

|

||||

|

||||

@@ -6,8 +6,8 @@ title: "🖥️ Workspace"

|

||||

The Workspace in Open WebUI provides a comprehensive environment for managing your AI interactions and configurations. It consists of several key components:

|

||||

|

||||

- [🤖 Models](./models.md) - Create and manage custom models tailored to specific purposes

|

||||

- [📚 Knowledge](./knowledge.md) - Manage your knowledge bases for retrieval augmented generation

|

||||

- [📝 Prompts](./prompts.md) - Create and organize reusable prompts

|

||||

- [🧠 Knowledge](./knowledge.md) - Manage your knowledge bases for retrieval augmented generation

|

||||

- [📚 Prompts](./prompts.md) - Create and organize reusable prompts

|

||||

- [🔒 Permissions](./permissions.md) - Configure access controls and feature availability

|

||||

|

||||

Each section of the Workspace is designed to give you fine-grained control over your Open WebUI experience, allowing for customization and optimization of your AI interactions.

|

||||

|

||||

@@ -29,7 +29,7 @@ Some examples of what you might store in Knowledge:

|

||||

|

||||

### How to Use Knowledge in Chats

|

||||

|

||||

Accessing stored Knowledge in your chats is easy! By simply referencing what’s saved(using '#' before the name), Open WebUI can pull in data or follow specific guidelines that you’ve set up in the Knowledge section.

|

||||

Accessing stored Knowledge in your chats is easy! By simply referencing what’s saved (using '#' before the name), Open WebUI can pull in data or follow specific guidelines that you’ve set up in the Knowledge section.

|

||||

|

||||

For example:

|

||||

|

||||

|

||||

@@ -3,4 +3,61 @@ sidebar_position: 2

|

||||

title: "📚 Prompts"

|

||||

---

|

||||

|

||||

COMING SOON!

|

||||

The `Prompts` section of the `Workspace` within Open WebUI enables users to create, manage, and share custom prompts. This feature streamlines interactions with AI models by allowing users to save frequently used prompts and easily access them through slash commands.

|

||||

|

||||

### Prompt Management

|

||||

|

||||

The Prompts interface provides several key features for managing your custom prompts:

|

||||

|

||||

* **Create**: Design new prompts with customizable titles, access levels, and content.

|

||||

* **Share**: Share prompts with other users based on configured access permissions.

|

||||

* **Access Control**: Set visibility and usage permissions for each prompt (refer to [Permissions](./permissions.md) for more details).

|

||||

* **Slash Commands**: Quickly access prompts using custom slash commands during chat sessions.

|

||||

|

||||

### Creating and Editing Prompts

|

||||

|

||||

When creating or editing a prompt, you can configure the following settings:

|

||||

|

||||

* **Title**: Give your prompt a descriptive name for easy identification.

|

||||

* **Access**: Set the access level to control who can view and use the prompt.

|

||||

* **Command**: Define a slash command that will trigger the prompt (e.g., `/summarize`).

|

||||

* **Prompt Content**: Write the actual prompt text that will be sent to the model.

|

||||

|

||||

### Prompt Variables

|

||||

|

||||

Open WebUI supports dynamic prompt variables that can be included in your prompts:

|

||||

|

||||

* **Clipboard Content**: Use `{{CLIPBOARD}}` to insert content from your clipboard.

|

||||

* **Date and Time**:

|

||||

* `{{CURRENT_DATE}}`: Current date

|

||||

* `{{CURRENT_DATETIME}}`: Current date and time

|

||||

* `{{CURRENT_TIME}}`: Current time

|

||||

* `{{CURRENT_TIMEZONE}}`: Current timezone

|

||||

* `{{CURRENT_WEEKDAY}}`: Current day of the week

|

||||

* **User Information**:

|

||||

* `{{USER_NAME}}`: Current user's name

|

||||

* `{{USER_LANGUAGE}}`: User's selected language

|

||||

* `{{USER_LOCATION}}`: User's location (requires HTTPS and Settings > Interface toggle)

|

||||

|

||||

### Variable Usage Guidelines

|

||||

|

||||

* Enclose variables with double curly braces: `{{variable}}`

|

||||

* The `{{USER_LOCATION}}` variable requires:

|

||||

* A secure HTTPS connection

|

||||

* Enabling the feature in Settings > Interface

|

||||

* The `{{CLIPBOARD}}` variable requires clipboard access permission from your device
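As a rough mental model of the double-curly-brace syntax, the sketch below replaces known `{{NAME}}` placeholders in a template and leaves unknown ones untouched. It is a hypothetical illustration only: `render_prompt`, the date formats, and the sample user name are assumptions and do not reflect how Open WebUI performs the substitution internally.

```python
import re
from datetime import datetime


def render_prompt(template: str, values: dict) -> str:
    """Replace each {{NAME}} with values[NAME]; leave unknown names as-is."""

    def substitute(match):
        name = match.group(1)
        # Fall back to the original placeholder text when no value is known.
        return values.get(name, match.group(0))

    return re.sub(r"\{\{([A-Z_]+)\}\}", substitute, template)


now = datetime.now()
values = {
    "CURRENT_DATE": now.strftime("%Y-%m-%d"),   # assumed format
    "CURRENT_WEEKDAY": now.strftime("%A"),      # assumed format
    "USER_NAME": "Ada",                         # sample value
}
rendered = render_prompt(
    "Hello {{USER_NAME}}, today is {{CURRENT_WEEKDAY}} {{CURRENT_DATE}}. {{CLIPBOARD}}",
    values,
)
```

In this sketch `{{CLIPBOARD}}` stays in the output because no value was supplied for it, which mirrors the idea that some variables only resolve when the required permission or setting is available.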

|

||||

|

||||

### Access Control and Permissions

|

||||

|

||||

Prompt management is controlled by the following permission settings:

|

||||

|

||||

* **Prompts Access**: Users need the `USER_PERMISSIONS_WORKSPACE_PROMPTS_ACCESS` permission to create and manage prompts.

|

||||

* For detailed information about configuring permissions, refer to the [Permissions documentation](./permissions.md).

|

||||

|

||||

### Best Practices

|

||||

|

||||

* Use clear, descriptive titles for your prompts

|

||||

* Create intuitive slash commands that reflect the prompt's purpose

|

||||

* Document any specific requirements or expected inputs in the prompt description

|

||||

* Test prompts with different variable combinations to ensure they work as intended

|

||||

* Consider access levels carefully when sharing prompts with other users. Publicly shared prompts appear automatically for all users when they type `/` in a chat, so avoid creating too many.

|

||||

|

||||

@@ -1,116 +1,278 @@

|

||||

---

|

||||

sidebar_position: 5

|

||||

title: "🛠️ Development Guide"

|

||||

title: "🛠️ Local Development Guide"

|

||||

---

|

||||

|

||||

Welcome to the **Open WebUI Development Setup Guide!** Whether you're a novice or an experienced developer, this guide will help you set up a **local development environment** for both the frontend and backend components. Let’s dive in! 🚀

|

||||

# Ready to Contribute to Open WebUI? Let's Get Started! 🚀

|

||||

|

||||

## System Requirements

|

||||

Excited to dive into Open WebUI development? This comprehensive guide will walk you through setting up your **local development environment** quickly and easily. Whether you're a seasoned developer or just starting out, we'll get you ready to tweak the frontend, enhance the backend, and contribute to the future of Open WebUI! Let's get your development environment up and running in simple, detailed steps!

|

||||

|

||||

- **Operating System**: Linux (or WSL on Windows) or macOS

|

||||

- **Python Version**: Python 3.11+

|

||||

- **Node.js Version**: 22.10+

|

||||

## Prerequisites

|

||||

|

||||

## Development Methods

|

||||

Before you begin, ensure your system meets these minimum requirements:

|

||||

|

||||

### 🐧 Local Development Setup

|

||||

- **Operating System:** Linux (or WSL on Windows), Windows 11, or macOS. *(Recommended for best compatibility)*

|

||||

- **Python:** Version **3.11 or higher**. *(Required for backend services)*

|

||||

- **Node.js:** Version **22.10 or higher**. *(Required for frontend development)*

|

||||

- **IDE (Recommended):** We recommend using an IDE like [VSCode](https://code.visualstudio.com/) for code editing, debugging, and integrated terminal access. Feel free to use your favorite IDE if you have one!

|

||||

- **[Optional] GitHub Desktop:** For easier management of the Git repository, especially if you are less familiar with command-line Git, consider installing [GitHub Desktop](https://desktop.github.com/).

|

||||

|

||||

1. **Clone the Repository**:

|

||||

## Setting Up Your Local Environment

|

||||

|

||||

```bash

|

||||

git clone https://github.com/open-webui/open-webui.git

|

||||

cd open-webui

|

||||

```

|

||||

We'll set up both the frontend (user interface) and backend (API and server logic) of Open WebUI.

|

||||

|

||||

2. **Frontend Setup**:

|

||||

- Create a `.env` file:

|

||||

### 1. Clone the Repository

|

||||

|

||||

First, use `git clone` to download the Open WebUI repository to your local machine. This will create a local copy of the project on your computer.

|

||||

|

||||

1. **Open your terminal** (or Git Bash if you're on Windows and using Git Bash).

|

||||

2. **Navigate to the directory** where you want to store the Open WebUI project.

|

||||

3. **Clone the repository:** Run the following command:

|

||||

|

||||

```bash
git clone https://github.com/open-webui/open-webui.git
cd open-webui
```

|

||||

|

||||

The `git clone` command downloads the project files from GitHub. The `cd open-webui` command then navigates you into the newly created project directory.

|

||||

|

||||

### 2. Frontend Setup (User Interface)

|

||||

|

||||

Let's get the user interface (what you see in your browser) up and running first:

|

||||

|

||||

1. **Configure Environment Variables:**

|

||||

- Copy the example environment file to `.env`:

|

||||

|

||||

```bash
cp -RPp .env.example .env
```

|

||||

|

||||

- Install dependencies:

|

||||

This command copies the `.env.example` file to a new file named `.env`. The `.env` file is where you'll configure environment variables for the frontend.

|

||||

|

||||

- **Customize `.env`**: Open the `.env` file in your code editor (like VSCode). This file contains configuration variables for the frontend, such as API endpoints and other settings. For local development, the default settings in `.env.example` are usually sufficient to start with. However, you can customize them if needed.

|

||||

|

||||

**Important:** Do not commit sensitive information to `.env` if you are contributing back to the repository.

|

||||

|

||||

2. **Install Frontend Dependencies:**

- **Navigate to the project root:** The frontend dependencies are installed from the project root (the `open-webui` directory), so make sure you are there.

|

||||

|

||||

```bash
# If you are not in the project root, run:
cd open-webui
```

|

||||

|

||||

- Install the required JavaScript packages:

|

||||

|

||||

```bash
npm install
```

|

||||

|

||||

- Start the frontend server:

|

||||

This command uses `npm` (Node Package Manager) to read the `package.json` file in the project root directory and download all the necessary JavaScript libraries and tools required for the frontend to run. This might take a few minutes depending on your internet connection.

|

||||

|

||||

3. **Start the Frontend Development Server:**

|

||||

|

||||

```bash
npm run dev
```

|

||||

|

||||

🌐 Available at: [http://localhost:5173](http://localhost:5173).

|

||||

This command launches the frontend development server. If the steps were followed successfully, it will usually indicate the server is running and provide a local URL.

|

||||

|

||||

3. **Backend Setup**:

|

||||

- Navigate to the backend:

|

||||

🎉 **Access the Frontend:** Open your web browser and go to [http://localhost:5173](http://localhost:5173). You should see a message indicating that Open WebUI's frontend is running and is waiting for the backend to be available. Don't worry about that message yet! Let's set up the backend next. **Keep this terminal running** – it's serving your frontend!

|

||||

|

||||

```bash

|

||||

cd backend

|

||||

```

|

||||

### 3. Backend Setup (API and Server)

|

||||

|

||||

- Use **Conda** for environment setup:

|

||||

For a smoother development experience, we **strongly recommend** using separate terminal instances for your frontend and backend processes. This keeps your workflows organized and makes it easier to manage each part of the application independently.

|

||||

|

||||

**Why Separate Terminals?**

|

||||

|

||||

- **Process Isolation:** The frontend and backend development servers are distinct programs. Running them in separate terminals ensures they don't interfere with each other and allows for independent restarts or stops.

|

||||

- **Clearer Logs and Output:** Each terminal will display the logs and output specific to either the frontend or backend. This makes debugging and monitoring much easier, as you're not sifting through interleaved logs.

|

||||

- **Reduced Terminal Clutter:** Mixing frontend and backend commands in a single terminal can become confusing. Separate terminals keep your command history and active processes organized.

|

||||

- **Improved Workflow Efficiency:** You can work on frontend tasks (like running `npm run dev`) in one terminal and simultaneously manage backend tasks (like starting the server or checking logs) in another, without having to switch contexts constantly within a single terminal.

|

||||

|

||||

**Using VSCode Integrated Terminals (Recommended):**

|

||||

|

||||

VSCode's integrated terminal feature makes managing multiple terminals incredibly easy. Here's how to leverage it for frontend and backend separation:

|

||||

|

||||

1. **Frontend Terminal (You likely already have this):** If you followed the Frontend Setup steps, you probably already have a terminal open in VSCode at the project root (`open-webui` directory). This is where you'll run your frontend commands (`npm run dev`, etc.). Ensure you are in the `open-webui` directory for the next steps if you are not already.

|

||||

|

||||

2. **Backend Terminal (Open a New One):**

|

||||

- In VSCode, go to **Terminal > New Terminal** (or use the shortcut ``Ctrl+Shift+` `` on Windows/Linux or ``Control+Shift+` `` on macOS). This will open a new integrated terminal panel.

|

||||

- **Navigate to the `backend` directory:** In this *new* terminal, use the `cd backend` command to change the directory to the `backend` folder within your project. This ensures all backend-related commands are executed in the correct context.

|

||||

|

||||

Now you have **two separate terminal instances within VSCode**: one for the frontend (likely in the `open-webui` directory) and one specifically for the backend (inside the `backend` directory). You can easily switch between these terminals within VSCode to manage your frontend and backend processes independently. This setup is highly recommended for a cleaner and more efficient development workflow.

|

||||

|

||||

**Backend Setup Steps (in your *backend* terminal):**

|

||||

|

||||

1. **Navigate to the Backend Directory:** (You should already be in the `backend` directory in your *new* terminal from the previous step). If not, run:

|

||||

|

||||

```bash
cd backend
```

|

||||

|

||||

2. **Create and Activate a Conda Environment (Recommended):**

|

||||

- We highly recommend using Conda to manage Python dependencies and isolate your project environment. This prevents conflicts with other Python projects on your system and ensures you have the correct Python version and libraries.

|

||||

|

||||

```bash
conda create --name open-webui python=3.11
conda activate open-webui
```

|

||||

|

||||

- Install dependencies:

|

||||

- `conda create --name open-webui python=3.11`: This command creates a new Conda environment named `open-webui` using Python version 3.11. If you chose a different Python 3.11.x version, that's fine.

|

||||

- `conda activate open-webui`: This command activates the newly created Conda environment. Once activated, your terminal prompt will usually change to indicate you are in the `open-webui` environment (e.g., it might show `(open-webui)` at the beginning of the line).

|

||||

|

||||

**Make sure you activate the environment in your backend terminal before proceeding.**

|

||||

|

||||

*(Using Conda is optional but strongly recommended for managing Python dependencies and avoiding conflicts.)* If you choose not to use Conda, ensure you are using Python 3.11 or higher and proceed to the next step, but be aware of potential dependency conflicts.

|

||||

|

||||

3. **Install Backend Dependencies:**

|

||||

- In your *backend* terminal (and with the Conda environment activated if you are using Conda), run:

|

||||

|

||||

```bash
pip install -r requirements.txt -U
```

|

||||

|

||||

- Start the backend:

|

||||

This command uses `pip` (Python Package Installer) to read the `requirements.txt` file in the `backend` directory, which lists all the Python libraries the backend needs to run. `pip install` downloads and installs these libraries into your active Python environment (your Conda environment if you are using it, or your system-wide Python environment otherwise). The `-U` flag also upgrades any packages that are already installed to the newest versions allowed by `requirements.txt`.

|

||||

|

||||

4. **Start the Backend Development Server:**

|

||||

- In your *backend* terminal, run:

|

||||

|

||||

```bash
sh dev.sh
```

|

||||

|

||||

📄 API docs available at: [http://localhost:8080/docs](http://localhost:8080/docs).

|

||||

This command executes the `dev.sh` script. This script likely contains the command to start the backend development server. *(You can open and examine the `dev.sh` file in your code editor to see the exact command being run if you are curious.)* The backend server will usually start and print some output to the terminal.

|

||||

|

||||

📄 **Explore the API Documentation:** Once the backend is running, you can access the automatically generated API documentation in your web browser at [http://localhost:8080/docs](http://localhost:8080/docs). This documentation is incredibly valuable for understanding the backend API endpoints, how to interact with the backend, and what data it expects and returns. Keep this documentation handy as you develop!

|

||||

|

||||

## 🐛 Troubleshooting

|

||||

🎉 **Congratulations!** If you have followed all the steps, you should now have both the frontend and backend development servers running locally. Go back to your browser tab where you accessed the frontend (usually [http://localhost:5173](http://localhost:5173)). **Refresh the page.** You should now see the full Open WebUI application running in your browser, connected to your local backend!

|

||||

|

||||

### **FATAL ERROR: Reached Heap Limit**

|

||||

## Troubleshooting Common Issues

|

||||

|

||||

If you encounter memory-related errors during the build, increase the **Node.js heap size**:

|

||||

Here are solutions to some common problems you might encounter during setup or development:

|

||||

|

||||

1. **Modify Dockerfile**:

|

||||

### 💥 "FATAL ERROR: Reached Heap Limit" (Frontend)

|

||||

|

||||

```dockerfile

|

||||

ENV NODE_OPTIONS=--max-old-space-size=4096

|

||||

```

|

||||

This error, often seen during frontend development, indicates that Node.js is running out of memory during the build process, especially when working with large frontend applications.

|

||||

|

||||

2. **Allocate at least 4 GB of RAM** to Node.js.

|

||||

**Solution:** Increase the Node.js heap size. This gives Node.js more memory to work with. You have a couple of options:

|

||||

|

||||

---

|

||||

1. **Using `NODE_OPTIONS` Environment Variable (Recommended for Development):**

|

||||

- This is a temporary way to increase the memory limit for the current terminal session. Before running `npm run dev` or `npm run build` in your *frontend* terminal, set the `NODE_OPTIONS` environment variable:

|

||||

|

||||

### **Other Issues**

|

||||

```bash
export NODE_OPTIONS="--max-old-space-size=4096" # For Linux/macOS (bash, zsh)
# set NODE_OPTIONS=--max-old-space-size=4096 # For Windows (Command Prompt)
# $env:NODE_OPTIONS="--max-old-space-size=4096" # For Windows (PowerShell)
npm run dev
```

|

||||

|

||||

- **Port Conflicts**:

|

||||

Ensure that no other processes are using **ports 8080 or 5173**.

|

||||

Choose the command appropriate for your operating system and terminal. `4096` represents 4GB of memory. You can try increasing this value further if needed (e.g., `8192` for 8GB). This setting will only apply to commands run in the current terminal session.

|

||||

|

||||

- **Hot Reload Not Working**:

|

||||

Verify that **watch mode** is enabled for both frontend and backend.

|

||||

2. **Modifying `Dockerfile` (For Dockerized Environments):**

|

||||

- If you are working with Docker, you can permanently set the `NODE_OPTIONS` environment variable within your `Dockerfile`. This is useful for consistent memory allocation in Dockerized environments, as shown in the original guide example:

|

||||

|

||||

```dockerfile
ENV NODE_OPTIONS=--max-old-space-size=4096
```

|

||||

|

||||

- **Allocate Sufficient RAM:** Regardless of the method, ensure your system or Docker container has enough RAM available for Node.js to use. **At least 4 GB of RAM is recommended**, and more might be needed for larger projects or complex builds. Close unnecessary applications to free up RAM.

|

||||

|

||||

### ⚠️ Port Conflicts (Frontend & Backend)

|

||||

|

||||

If you see errors related to ports, such as "Address already in use" or "Port already bound," it means another application on your system is already using port `5173` (default for frontend) or `8080` (default for backend). Only one application can use a specific port at a time.
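Before hunting for the conflicting process, a quick check like the sketch below can confirm whether anything is already listening on the default ports. It uses only Python's standard `socket` module; the helper names `port_in_use` and `check_dev_ports` are hypothetical, and the check assumes the servers listen on the IPv4 loopback address `127.0.0.1`.

```python
import socket


def port_in_use(port: int, host: str = "127.0.0.1") -> bool:
    """Return True if something accepts TCP connections on host:port."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        sock.settimeout(0.5)
        # connect_ex returns 0 on success instead of raising an exception.
        return sock.connect_ex((host, port)) == 0


def check_dev_ports(ports=(5173, 8080)) -> dict:
    """Map each default dev port (frontend, backend) to whether it is taken."""
    return {port: port_in_use(port) for port in ports}
```

Calling `check_dev_ports()` before starting the servers returns something like `{5173: False, 8080: True}` when only the backend port is occupied. A process bound only to an IPv6 address would not be detected by this IPv4 check, so treat a `False` result as a hint rather than a guarantee.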

|

||||

|

||||

**Solution:**

|

||||

|

||||

1. **Identify the Conflicting Process:** You need to find out which application is using the port you need.

|

||||

- **Linux/macOS:** Open a new terminal and use the `lsof` or `netstat` commands:

|

||||

- `lsof -i :5173` (or `:8080` for the backend port)

|

||||

- `netstat -tulnp | grep 5173` (or `8080`; Linux only, because the macOS `netstat` does not accept this flag combination)

|

||||

These commands will list the process ID (PID) and the name of the process using the specified port.

|

||||

- **Windows:** Open Command Prompt or PowerShell as an administrator and use `netstat` or `Get-NetTCPConnection`:

|

||||