
Model Download | Quick Start | License | Citation

1. Introduction

Introducing DeepSeek-VL, an open-source Vision-Language (VL) model designed for real-world vision and language understanding applications. DeepSeek-VL has general multimodal understanding capabilities and can process logical diagrams, web pages, formulas, scientific literature, natural images, and embodied-intelligence tasks in complex scenarios.

DeepSeek-VL: Towards Real-World Vision-Language Understanding

Haoyu Lu*, Wen Liu*, Bo Zhang**, Bingxuan Wang, Kai Dong, Bo Liu, Jingxiang Sun, Tongzheng Ren, Zhuoshu Li, Hao Yang, Yaofeng Sun, Chengqi Deng, Hanwei Xu, Zhenda Xie, Chong Ruan (*Equal Contribution, **Project Lead)

2. Release

✅ 2024-03-13: Support DeepSeek-VL gradio demo.

✅ 2024-03-11: DeepSeek-VL family released, including DeepSeek-VL-7B-base, DeepSeek-VL-7B-chat, DeepSeek-VL-1.3B-base, and DeepSeek-VL-1.3B-chat.

The release includes a diverse set of models tailored for various applications within the DeepSeek-VL family. The models come in two sizes: 7B and 1.3B parameters, each offering base and chat variants to cater to different needs and integration scenarios.

3. Model Downloads

We release the DeepSeek-VL family, including the 1.3B-base, 1.3B-chat, 7B-base, and 7B-chat models, to the public to support a broader and more diverse range of research within both academic and commercial communities. Please note that use of these models is subject to the terms outlined in the License section. Commercial usage is permitted under these terms.

Huggingface

| Model | Sequence Length | Download |
|---|---|---|
| DeepSeek-VL-1.3B-base | 4096 | 🤗 Hugging Face |
| DeepSeek-VL-1.3B-chat | 4096 | 🤗 Hugging Face |
| DeepSeek-VL-7B-base | 4096 | 🤗 Hugging Face |
| DeepSeek-VL-7B-chat | 4096 | 🤗 Hugging Face |
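The four checkpoints in the table can be addressed by repository id. The sketch below is illustrative only: `repo_id` and `download` are hypothetical helpers, and only `deepseek-ai/deepseek-vl-7b-chat` appears in this README; the other three ids are assumed to follow the same naming pattern. The download step assumes the `huggingface_hub` package is installed.

```python
# Hypothetical helpers, not part of the deepseek_vl package.
SIZES = ("1.3b", "7b")
VARIANTS = ("base", "chat")


def repo_id(size: str, variant: str) -> str:
    """Build a Hugging Face repo id such as 'deepseek-ai/deepseek-vl-7b-chat'.

    Assumption: all four models share the naming pattern of the one id
    shown in the Quick Start section.
    """
    size, variant = size.lower(), variant.lower()
    if size not in SIZES:
        raise ValueError(f"unknown size {size!r}, expected one of {SIZES}")
    if variant not in VARIANTS:
        raise ValueError(f"unknown variant {variant!r}, expected one of {VARIANTS}")
    return f"deepseek-ai/deepseek-vl-{size}-{variant}"


def download(size: str, variant: str, local_dir=None) -> str:
    """Fetch the checkpoint files and return the local folder path."""
    # Imported lazily so repo_id() works without huggingface_hub installed.
    from huggingface_hub import snapshot_download

    return snapshot_download(repo_id=repo_id(size, variant), local_dir=local_dir)


print(repo_id("7B", "chat"))
```

The returned folder can then be passed wherever a local model path is accepted, for example as `model_path` in the inference example.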

4. Quick Start

Installation

In a Python >= 3.8 environment, install the necessary dependencies by running the following command from the repository root:

```shell
pip install -e .
```
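Before running the examples, it can help to confirm that the interpreter and packages are in place. The following is a minimal sketch, not part of the repository: `python_ok` and `missing_packages` are hypothetical helper names, and the package list is simply the set of imports used by the inference example.

```python
import importlib.util
import sys

MIN_PYTHON = (3, 8)  # minimum version stated in the installation step


def python_ok(version=None) -> bool:
    """Return True if the given (or running) interpreter is new enough."""
    if version is None:
        version = sys.version_info
    return tuple(version[:2]) >= MIN_PYTHON


def missing_packages(names=("torch", "transformers", "deepseek_vl")):
    """Return the top-level packages from `names` that cannot be found."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    print("Python version OK:", python_ok())
    print("Missing packages:", missing_packages())
```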

Simple Inference Example

```python
import torch
from transformers import AutoModelForCausalLM

from deepseek_vl.models import VLChatProcessor, MultiModalityCausalLM
from deepseek_vl.utils.io import load_pil_images

# specify the path to the model
model_path = "deepseek-ai/deepseek-vl-7b-chat"
vl_chat_processor: VLChatProcessor = VLChatProcessor.from_pretrained(model_path)
tokenizer = vl_chat_processor.tokenizer

vl_gpt: MultiModalityCausalLM = AutoModelForCausalLM.from_pretrained(model_path, trust_remote_code=True)
vl_gpt = vl_gpt.to(torch.bfloat16).cuda().eval()

conversation = [
    {
        "role": "User",
        "content": "<image_placeholder>Describe each stage of this image.",
        "images": ["./images/training_pipelines.jpg"]
    },
    {
        "role": "Assistant",
        "content": ""
    }
]

# load images and prepare for inputs
pil_images = load_pil_images(conversation)
prepare_inputs = vl_chat_processor(
    conversations=conversation,
    images=pil_images,
    force_batchify=True
).to(vl_gpt.device)

# run image encoder to get the image embeddings
inputs_embeds = vl_gpt.prepare_inputs_embeds(**prepare_inputs)

# run the model to get the response
outputs = vl_gpt.language_model.generate(
    inputs_embeds=inputs_embeds,
    attention_mask=prepare_inputs.attention_mask,
    pad_token_id=tokenizer.eos_token_id,
    bos_token_id=tokenizer.bos_token_id,
    eos_token_id=tokenizer.eos_token_id,
    max_new_tokens=512,
    do_sample=False,
    use_cache=True
)

answer = tokenizer.decode(outputs[0].cpu().tolist(), skip_special_tokens=True)
print(f"{prepare_inputs['sft_format'][0]}", answer)
```
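The `conversation` list in the example above pairs a `User` turn (text plus image paths) with an empty `Assistant` turn for the model to fill. A small helper can build that structure for arbitrary prompts. This is a sketch under assumptions: `build_conversation` is a hypothetical name, and placing one `<image_placeholder>` tag per image before the text is inferred from the single-image example rather than confirmed for multiple images.

```python
IMAGE_TAG = "<image_placeholder>"  # tag used in the example's User content


def build_conversation(prompt: str, image_paths):
    """Return a two-turn conversation in the format used by the example.

    Assumption: each image needs exactly one placeholder tag, and the tags
    are placed in front of the prompt text.
    """
    image_paths = list(image_paths)
    content = IMAGE_TAG * len(image_paths) + prompt
    return [
        {"role": "User", "content": content, "images": image_paths},
        # Empty Assistant turn: the model generates this reply.
        {"role": "Assistant", "content": ""},
    ]


conversation = build_conversation(
    "Describe each stage of this image.",
    ["./images/training_pipelines.jpg"],
)
print(conversation[0]["content"])
```

The result can be passed to `load_pil_images` and `vl_chat_processor` exactly as the hand-written `conversation` is.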

CLI Chat

```shell
python cli_chat.py --model_path "deepseek-ai/deepseek-vl-7b-chat"

# or local path
python cli_chat.py --model_path "local model path"
```

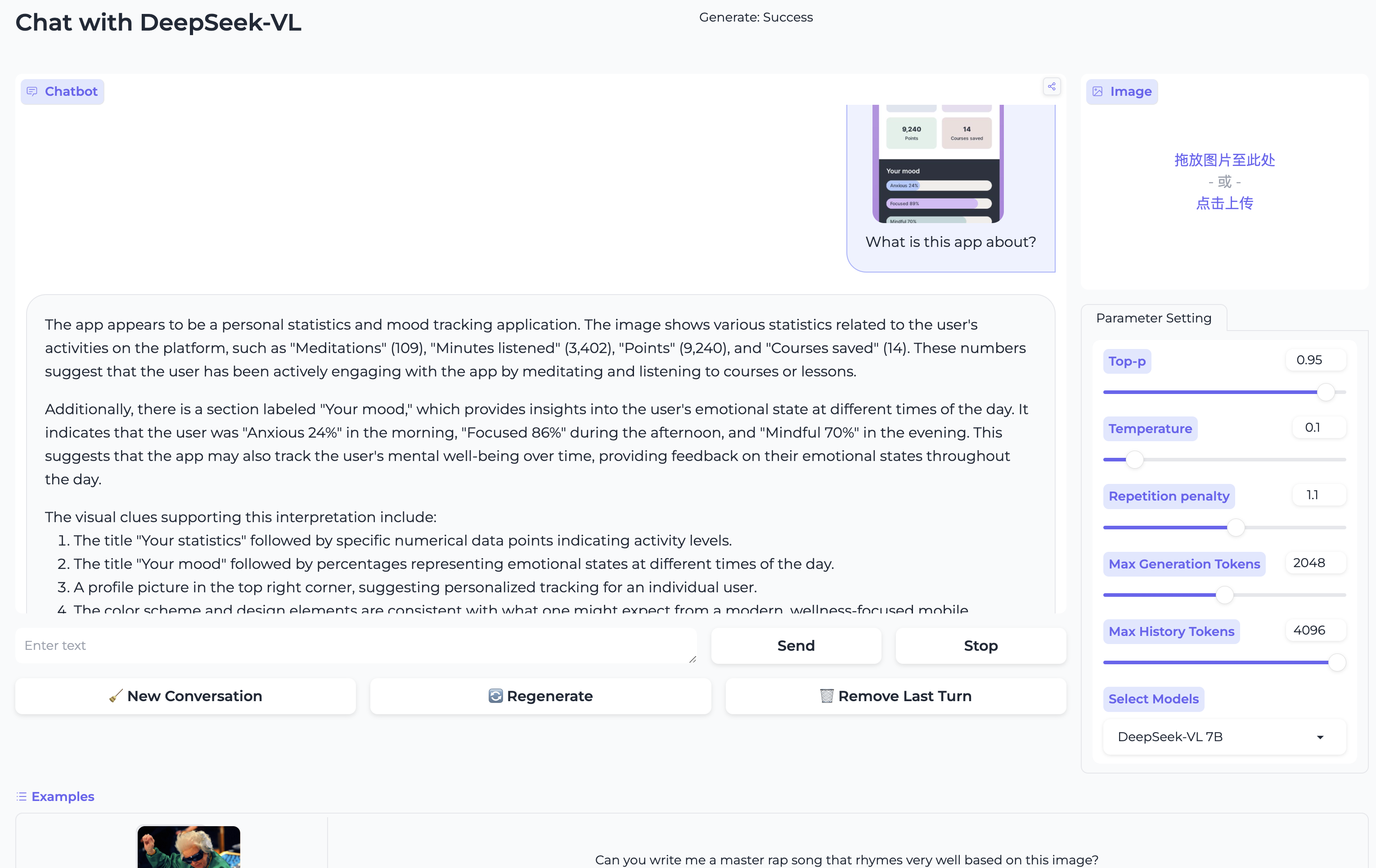

Gradio Demo

```shell
pip install -e .[gradio]

python deepseek_vl/serve/app_deepseek.py
```

Have Fun!

5. License

This code repository is licensed under the MIT License. Use of the DeepSeek-VL Base/Chat models is subject to the DeepSeek Model License. The DeepSeek-VL series (including Base and Chat) supports commercial use.

6. Citation

```
@misc{lu2024deepseekvl,
      title={DeepSeek-VL: Towards Real-World Vision-Language Understanding},
      author={Haoyu Lu and Wen Liu and Bo Zhang and Bingxuan Wang and Kai Dong and Bo Liu and Jingxiang Sun and Tongzheng Ren and Zhuoshu Li and Hao Yang and Yaofeng Sun and Chengqi Deng and Hanwei Xu and Zhenda Xie and Chong Ruan},
      year={2024},
      eprint={2403.05525},
      archivePrefix={arXiv},
      primaryClass={cs.AI}
}
```

7. Contact

If you have any questions, please raise an issue or contact us at service@deepseek.com.