7.1 KiB

| title |

|---|

| PyTorch |

:::tip If you are not already using ClearML, see Getting Started for setup instructions. :::

ClearML integrates seamlessly with PyTorch, automatically logging its models.

All you have to do is simply add two lines of code to your PyTorch script:

from clearml import Task

task = Task.init(task_name="<task_name>", project_name="<project_name>")

And that’s it! This creates a ClearML Task which captures:

- Source code and uncommitted changes

- Installed packages

- PyTorch models

- TensorBoard outputs

- Console output

- General details such as machine details, runtime, creation date etc.

- And more

You can view all the task details in the WebApp.

Automatic Logging Control

By default, when ClearML is integrated into your PyTorch script, it captures PyTorch models. But, you may want to have more control over what your experiment logs.

To control a task's framework logging, use the auto_connect_frameworks parameter of Task.init().

Completely disable all automatic logging by setting the parameter to False. For finer grained control of logged

frameworks, input a dictionary, with framework-boolean pairs.

For example:

auto_connect_frameworks={

'pytorch': False, 'matplotlib': True, 'tensorboard': False, 'tensorflow': False,

'xgboost': False, 'scikit': True, 'fastai': True, 'lightgbm': False,

'hydra': True, 'detect_repository': True, 'tfdefines': True, 'joblib': True,

'megengine': True, 'jsonargparse': True, 'catboost': True

}

You can also input wildcards as dictionary values, so ClearML will log a model created by a framework only if its local path matches at least one wildcard.

For example, in the code below, ClearML will log PyTorch models only if their paths have the .pt extension. The

unspecified frameworks' values default to true so all their models are automatically logged.

auto_connect_frameworks={'pytorch' : '*.pt'}

Manual Logging

To augment its automatic logging, ClearML also provides an explicit logging interface.

See more information about explicitly logging information to a ClearML Task:

- Models

- Configuration (e.g. parameters, configuration files)

- Artifacts (e.g. output files or python objects created by a task)

- Scalars

- Text/Plots/Debug Samples

See Explicit Reporting Tutorial.

Distributed Training

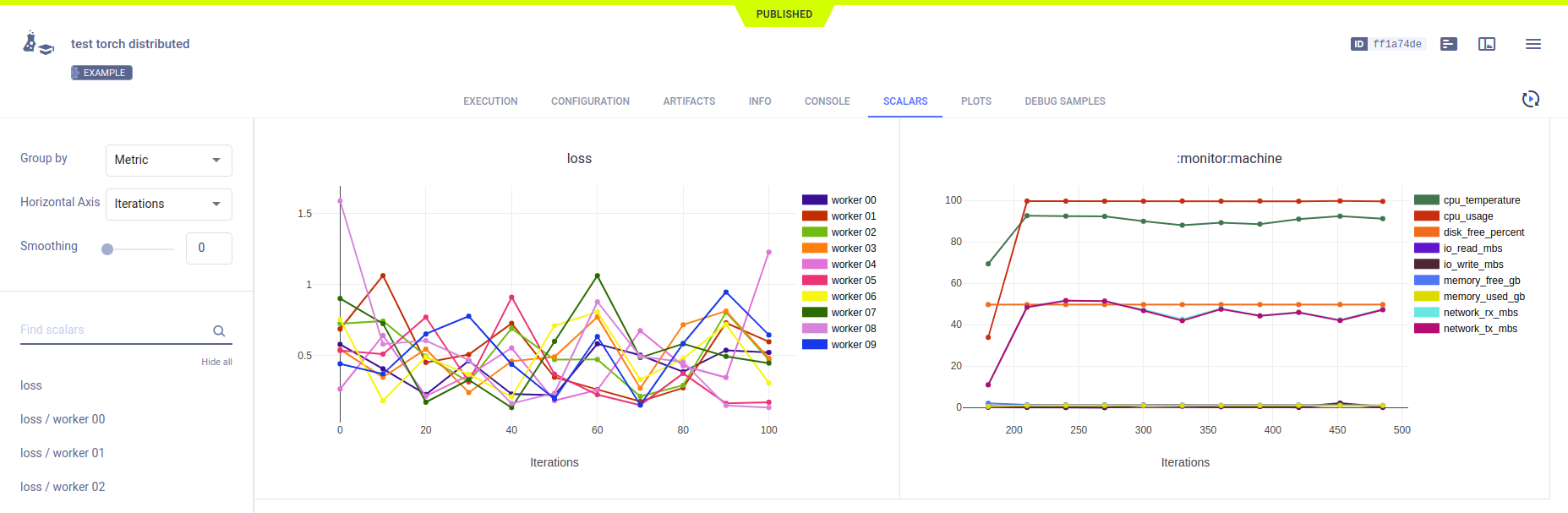

You can integrate ClearML into your distributed training script. With ClearML, you can easily log information from each subprocess to a centralized location and visualize it.

The PyTorch Distributed script demonstrates using ClearML with the PyTorch Distributed Communications Package (torch.distributed): a ClearML task is initialized before the subprocesses are spawned, and then each subprocess manually reports its artifacts, scalars, and hyperparameters to the task.

Examples

Take a look at ClearML's PyTorch examples. The examples use PyTorch and ClearML in different configurations with additional tools, like argparse, TensorBoard, and matplotlib:

- PyTorch MNIST - Demonstrates ClearML automatically logging models created with PyTorch, and

argparsecommand line parameters - PyTorch with Matplotlib - Demonstrates ClearML’s automatic logging PyTorch models and matplotlib images. The images are stored in the resulting ClearML experiment's Debug Samples

- PyTorch with TensorBoard - Demonstrates ClearML automatically logging PyTorch models, and scalars, debug samples, and text logged using TensorBoard's

SummaryWriter - PyTorch TensorBoard Toy - Demonstrates ClearML automatically logging debug samples logged using TensorBoard's

SummaryWriter - PyTorch TensorBoardX - Demonstrates ClearML automatically logging PyTorch models, and scalars, debug samples, and text logged using TensorBoardX's

SummaryWriter - PyTorch Abseil - Demonstrates ClearML automatically logging PyTorch models and

absl.flagsparameters - PyTorch Model Updating - Demonstrates training, logging, and updating a PyTorch model using ClearML's OutputModel class

- PyTorch Distributed - Demonstrates using ClearML with the PyTorch Distributed Communications Package (torch.distributed)

Remote Execution

ClearML logs all the information required to reproduce an experiment on a different machine (installed packages, uncommitted changes etc.). The ClearML Agent listens to designated queues and when a task is enqueued, the agent pulls it, recreates its execution environment, and runs it, reporting its scalars, plots, etc. to the experiment manager.

Deploy a ClearML Agent onto any machine (e.g. a cloud VM, a local GPU machine, your own laptop) by simply running the following command on it:

clearml-agent daemon --queue <queues_to_listen_to> [--docker]

Use the ClearML Autoscalers, to help you manage cloud workloads in the cloud of your choice (AWS, GCP, Azure) and automatically deploy ClearML agents: the autoscaler automatically spins up and shuts down instances as needed, according to a resource budget that you set.

Cloning, Editing, and Enqueuing

Use ClearML's web interface to edit task details, like configuration parameters or input models, then execute the task with the new configuration on a remote machine:

- Clone the experiment

- Edit the hyperparameters and/or other details

- Enqueue the task

The ClearML Agent executing the task will use the new values to override any hard coded values.

Executing a Task Remotely

You can set a task to be executed remotely programmatically by adding Task.execute_remotely()

to your script. This method stops the current local execution of the task, and then enqueues it to a specified queue to

re-run it on a remote machine.

# If executed locally, process will terminate, and a copy will be executed by an agent instead

task.execute_remotely(queue_name='default', exit_process=True)