mirror of

https://github.com/open-webui/docs

synced 2025-06-16 11:28:36 +00:00

Merge branch 'open-webui:main' into main


@@ -1,6 +1,6 @@

|

||||

---

|

||||

sidebar_position: 3

|

||||

title: "Chat Parameters"

|

||||

title: "⚙️ Chat Parameters"

|

||||

---

|

||||

|

||||

Within Open WebUI, there are three levels to setting a **System Prompt** and **Advanced Parameters**: per-chat basis, per-model basis, and per-account basis. This hierarchical system allows for flexibility while maintaining structured administration and control.

|

||||

|

||||

35

docs/features/conversation-organization.md

Normal file


@@ -0,0 +1,35 @@

|

||||

---

|

||||

sidebar_position: 4

|

||||

title: "🗂️ Organizing Conversations"

|

||||

---

|

||||

|

||||

Open WebUI lets you categorize and tag conversations so they are easier to find and retrieve later. The two primary ways to organize conversations are **Folders** and **Tags**.

|

||||

|

||||

## Organizing Conversations with Folders

|

||||

|

||||

Folders allow you to group related conversations together for quick access and better organization.

|

||||

|

||||

- **Creating a Folder**: You can create a new folder to store specific conversations. This is useful if you want to keep conversations of a similar topic or purpose together.

|

||||

- **Moving Conversations into Folders**: Conversations can be moved into folders by dragging and dropping them. This allows you to structure your workspace in a way that suits your workflow.

|

||||

|

||||

|

||||

|

||||

### Example Use Case

|

||||

|

||||

:::tip **Organizing by Project**

|

||||

If you are managing multiple projects, you can create separate folders for each project and move relevant conversations into these folders. This helps keep all project-related discussions in one place.

|

||||

:::

|

||||

|

||||

## Tagging Conversations

|

||||

|

||||

Tags provide an additional layer of organization by allowing you to label conversations with keywords or phrases.

|

||||

|

||||

- **Adding Tags to Conversations**: Tags can be applied to conversations based on their content or purpose. Tags are flexible and can be added or removed as needed.

|

||||

|

||||

- **Using Tags for Searching**: Tags make it easy to locate specific conversations by using the search feature. You can filter conversations by tags to quickly find those related to specific topics.

|

||||

|

||||

### Example Use Case

|

||||

|

||||

:::tip **Tagging by Topic**

|

||||

If you frequently discuss certain topics, such as "marketing" or "development," you can tag conversations with these terms. Later, when you search for a specific tag, all relevant conversations will be quickly accessible.

|

||||

:::

|

||||

@@ -1,6 +1,6 @@

|

||||

---

|

||||

sidebar_position: 6

|

||||

title: "Image Generation"

|

||||

title: "🎨 Image Generation"

|

||||

---

|

||||

|

||||

# Image Generation

|

||||

|

||||

@@ -1,6 +1,6 @@

|

||||

---

|

||||

sidebar_position: 3

|

||||

title: "Ollama Load Balancing"

|

||||

title: "⚖️ Ollama Load Balancing"

|

||||

---

|

||||

|

||||

# Ollama Load Balancing Setup

|

||||

|

||||

94

docs/features/url-params.md

Normal file


@@ -0,0 +1,94 @@

|

||||

---

|

||||

sidebar_position: 3

|

||||

title: "URL Parameters"

|

||||

---

|

||||

|

||||

In Open WebUI, chat sessions can be customized through various URL parameters. These parameters allow you to set specific configurations, enable features, and define model settings on a per-chat basis. This approach provides flexibility and control over individual chat sessions directly from the URL.

|

||||

|

||||

## URL Parameter Overview

|

||||

|

||||

The following table lists the available URL parameters, their function, and example usage.

|

||||

|

||||

| **Parameter**         | **Description**                                                       | **Example**              |
|-----------------------|-----------------------------------------------------------------------|--------------------------|
| `models`              | Specifies the models to be used, as a comma-separated list.           | `/?models=model1,model2` |
| `model`               | Specifies a single model to be used for the chat session.             | `/?model=model1`         |
| `youtube`             | Specifies a YouTube video ID to be transcribed within the chat.       | `/?youtube=VIDEO_ID`     |
| `web-search`          | Enables web search functionality if set to `true`.                    | `/?web-search=true`      |
| `tools` or `tool-ids` | Specifies a comma-separated list of tool IDs to activate in the chat. | `/?tools=tool1,tool2`    |
| `call`                | Enables a call overlay if set to `true`.                              | `/?call=true`            |
| `q`                   | Sets an initial query or prompt for the chat.                         | `/?q=Hello%20there`      |
| `temporary-chat`      | Marks the chat as temporary if set to `true`, for one-time sessions.  | `/?temporary-chat=true`  |

|

||||

|

||||

### 1. **Models and Model Selection**

|

||||

|

||||

- **Description**: The `models` and `model` parameters allow you to specify which [language models](/features/workspace/models.md) should be used for a particular chat session.

|

||||

- **How to Set**: You can use either `models` for multiple models or `model` for a single model.

|

||||

- **Example**:

|

||||

- `/?models=model1,model2` – This initializes the chat with `model1` and `model2`.

|

||||

- `/?model=model1` – This sets `model1` as the sole model for the chat.

|

||||

|

||||

### 2. **YouTube Transcription**

|

||||

|

||||

- **Description**: The `youtube` parameter takes a YouTube video ID, enabling the chat to transcribe the specified video.

|

||||

- **How to Set**: Use the YouTube video ID as the value for this parameter.

|

||||

- **Example**: `/?youtube=VIDEO_ID`

|

||||

- **Behavior**: This triggers transcription functionality within the chat for the provided YouTube video.

|

||||

|

||||

### 3. **Web Search**

|

||||

|

||||

- **Description**: Enabling `web-search` allows the chat session to access [web search](/features/web_search) functionality.

|

||||

- **How to Set**: Set this parameter to `true` to enable web search.

|

||||

- **Example**: `/?web-search=true`

|

||||

- **Behavior**: If enabled, the chat can retrieve web search results as part of its responses.

|

||||

|

||||

### 4. **Tool Selection**

|

||||

|

||||

- **Description**: The `tools` or `tool-ids` parameters specify which [tools](/features/plugin/tools) to activate within the chat.

|

||||

- **How to Set**: Provide a comma-separated list of tool IDs as the parameter’s value.

|

||||

- **Example**: `/?tools=tool1,tool2` or `/?tool-ids=tool1,tool2`

|

||||

- **Behavior**: Each tool ID is matched and activated within the session for user interaction.

|

||||

|

||||

### 5. **Call Overlay**

|

||||

|

||||

- **Description**: The `call` parameter enables a video or call overlay in the chat interface.

|

||||

- **How to Set**: Set the parameter to `true` to enable the call overlay.

|

||||

- **Example**: `/?call=true`

|

||||

- **Behavior**: Activates a call interface overlay, allowing features such as live transcription and video input.

|

||||

|

||||

### 6. **Initial Query Prompt**

|

||||

|

||||

- **Description**: The `q` parameter allows setting an initial query or prompt for the chat.

|

||||

- **How to Set**: Specify the query or prompt text as the parameter value.

|

||||

- **Example**: `/?q=Hello%20there`

|

||||

- **Behavior**: The chat starts with the specified prompt, automatically submitting it as the first message.

|

||||

|

||||

### 7. **Temporary Chat Sessions**

|

||||

|

||||

- **Description**: The `temporary-chat` parameter marks the chat as a temporary session. This may limit features such as saving chat history or applying persistent settings.

|

||||

- **How to Set**: Set this parameter to `true` for a temporary chat session.

|

||||

- **Example**: `/?temporary-chat=true`

|

||||

- **Behavior**: This initiates a disposable chat session without saving history or applying advanced configurations.

|

||||

|

||||

<details>

|

||||

<summary>Example Use Case</summary>

|

||||

:::tip **Temporary Chat Session**

|

||||

Suppose a user wants to initiate a quick chat session without saving the history. They can do so by setting `temporary-chat=true` in the URL. This provides a disposable chat environment ideal for one-time interactions.

|

||||

:::

|

||||

</details>

|

||||

|

||||

## Using Multiple Parameters Together

|

||||

|

||||

These URL parameters can be combined to create highly customized chat sessions. For example:

|

||||

```bash
/?models=model1,model2&youtube=VIDEO_ID&web-search=true&tools=tool1,tool2&call=true&q=Hello%20there&temporary-chat=true
```

|

||||

|

||||

This URL will:

|

||||

- Initialize the chat with `model1` and `model2`.

|

||||

- Enable YouTube transcription, web search, and specified tools.

|

||||

- Display a call overlay.

|

||||

- Set an initial prompt of "Hello there."

|

||||

- Mark the chat as temporary, avoiding any history saving.
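A combined URL like the one above can also be assembled programmatically, which avoids hand-encoding the prompt text. The following is a minimal sketch using only the Python standard library; the helper name `build_chat_url` and the base URL `http://localhost:3000` are assumptions for illustration and are not part of Open WebUI.

```python
from urllib.parse import quote, urlencode


def build_chat_url(base_url, **params):
    """Build a chat URL from keyword arguments (hypothetical helper).

    Underscores in argument names become hyphens (web_search -> web-search),
    lists are joined with commas, and True is written as "true".
    Parameters set to False or None are left out of the URL.
    """
    query = {}
    for key, value in params.items():
        if value is None or value is False:
            continue
        if value is True:
            value = "true"
        elif isinstance(value, (list, tuple)):
            value = ",".join(value)
        query[key.replace("_", "-")] = value
    # quote (rather than the default quote_plus) encodes spaces as %20,
    # and safe="," keeps comma-separated lists readable.
    return f"{base_url.rstrip('/')}/?{urlencode(query, quote_via=quote, safe=',')}"


url = build_chat_url(
    "http://localhost:3000",  # assumed local address
    models=["model1", "model2"],
    web_search=True,
    q="Hello there",
    temporary_chat=True,
)
print(url)
```

Keeping the encoding in one place means prompts containing spaces, ampersands, or other reserved characters do not break the remaining parameters.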

|

||||

|

||||

|

||||

@@ -351,6 +351,11 @@ requests initiated by third-party websites, but only over HTTPS.

|

||||

- Description: Sets the timeout in seconds for internal aiohttp connections. This impacts things

|

||||

such as connections to Ollama and OpenAI endpoints.

|

||||

|

||||

### `AIOHTTP_CLIENT_TIMEOUT_OPENAI_MODEL_LIST`

|

||||

|

||||

- Type: `int`

|

||||

- Description: Sets the timeout in seconds for fetching the OpenAI model list. This can be useful in cases where network latency requires a longer timeout duration to successfully retrieve the model list.
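As an illustration of how an integer timeout like this can be consumed, the sketch below reads the variable from the environment and falls back to a default when it is unset or invalid. The helper `read_timeout_seconds` is hypothetical; how Open WebUI itself parses the variable is not described here.

```python
import os


def read_timeout_seconds(name, default=None):
    """Return the integer stored in environment variable `name`.

    Falls back to `default` when the variable is unset, empty,
    or not a valid integer. Hypothetical helper for illustration only.
    """
    raw = os.environ.get(name, "")
    try:
        return int(raw)
    except ValueError:
        return default


# Example: allow up to 30 seconds for fetching the model list.
os.environ["AIOHTTP_CLIENT_TIMEOUT_OPENAI_MODEL_LIST"] = "30"
timeout = read_timeout_seconds("AIOHTTP_CLIENT_TIMEOUT_OPENAI_MODEL_LIST")
```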

|

||||

|

||||

#### `FONTS_DIR`

|

||||

|

||||

- Type: `str`

|

||||

|

||||

@@ -11,33 +11,33 @@ Explore deeper concepts and advanced configurations of Open WebUI to enhance you

|

||||

|

||||

## 🔧 Environment Configuration

|

||||

Understand how to set environment variables to customize your Open WebUI setup.

|

||||

[Environment Configuration Guide](./env-configuration)

|

||||

[Environment Configuration Guide](/getting-started/advanced-topics/env-configuration)

|

||||

|

||||

---

|

||||

|

||||

## 📊 Logging and Monitoring

|

||||

Learn how to monitor, log, and troubleshoot your system effectively.

|

||||

[Logging and Monitoring Guide](./logging)

|

||||

[Logging and Monitoring Guide](/getting-started/advanced-topics/logging)

|

||||

|

||||

---

|

||||

|

||||

## 🛠️ Development Guide

|

||||

Dive into the development process and learn how to contribute to Open WebUI.

|

||||

[Development Guide](./development)

|

||||

[Development Guide](/getting-started/advanced-topics/development)

|

||||

|

||||

---

|

||||

|

||||

## 🔒 HTTPS Encryption

|

||||

Ensure secure communication by implementing HTTPS encryption in your deployment.

|

||||

[HTTPS Encryption Guide](./https-encryption)

|

||||

[HTTPS Encryption Guide](/getting-started/advanced-topics/https-encryption)

|

||||

|

||||

---

|

||||

|

||||

## 🔗 API Endpoints

|

||||

Get essential information for API integration and automation using our models.

|

||||

[API Endpoints Guide](./api-endpoints)

|

||||

[API Endpoints Guide](/getting-started/advanced-topics/api-endpoints)

|

||||

|

||||

---

|

||||

|

||||

Looking for installation instructions? Head over to our [Quick Start Guide](../quick-start).

|

||||

Need to explore core features? Check out [Using OpenWebUI](../using-openwebui).

|

||||

Looking for installation instructions? Head over to our [Quick Start Guide](/getting-started/quick-start).

|

||||

Need to explore core features? Check out [Using OpenWebUI](/getting-started/using-openwebui).

|

||||

|

||||

@@ -21,7 +21,7 @@ import CondaWindows from './tab-python/CondaWindows.md';

|

||||

|

||||

<TopBanners />

|

||||

|

||||

## How to Install ⏱️

|

||||

# How to Install ⏱️

|

||||

|

||||

:::info **Important Note on User Roles and Privacy:**

|

||||

|

||||

@@ -33,42 +33,79 @@ import CondaWindows from './tab-python/CondaWindows.md';

|

||||

|

||||

Choose your preferred installation method below:

|

||||

|

||||

- **Docker:** Recommended for most users due to ease of setup and flexibility.

|

||||

- **Kubernetes:** Ideal for enterprise deployments that require scaling and orchestration.

|

||||

- **Python:** Suitable for low-resource environments or those wanting a manual setup.

|

||||

- **Docker:** **Officially supported and recommended for most users**

|

||||

- **Python:** Suitable for low-resource environments or those wanting a manual setup

|

||||

- **Kubernetes:** Ideal for enterprise deployments that require scaling and orchestration

|

||||

|

||||

|

||||

<Tabs>

|

||||

<TabItem value="docker" label="Docker">

|

||||

|

||||

<Tabs>

|

||||

<TabItem value="docker-compose" label="Docker Compose">

|

||||

<DockerCompose />

|

||||

<DataStorage />

|

||||

<DockerUpdating />

|

||||

<TabItem value="docker" label="Docker">

|

||||

<div className='mt-5'>

|

||||

<ManualDocker />

|

||||

<DataStorage />

|

||||

<DockerUpdating />

|

||||

</div>

|

||||

</TabItem>

|

||||

|

||||

<TabItem value="compose" label="Docker Compose">

|

||||

<div className='mt-5'>

|

||||

<DockerCompose />

|

||||

</div>

|

||||

</TabItem>

|

||||

|

||||

<TabItem value="podman" label="Podman">

|

||||

<Podman />

|

||||

</TabItem>

|

||||

|

||||

<TabItem value="manual-docker" label="Manual Docker">

|

||||

<ManualDocker />

|

||||

<DataStorage />

|

||||

<DockerUpdating />

|

||||

<div className='mt-5'>

|

||||

<Podman />

|

||||

</div>

|

||||

</TabItem>

|

||||

|

||||

<TabItem value="swarm" label="Docker Swarm">

|

||||

<DockerSwarm />

|

||||

<div className='mt-5'>

|

||||

<DockerSwarm />

|

||||

</div>

|

||||

</TabItem>

|

||||

|

||||

</Tabs>

|

||||

|

||||

</TabItem>

|

||||

<TabItem value="python" label="Python">

|

||||

<Tabs>

|

||||

<TabItem value="conda" label="Conda">

|

||||

<div className='mt-5'>

|

||||

<div className='text-lg font-semibold mb-1'>Choose Your Platform</div>

|

||||

<Tabs groupId="platform-conda">

|

||||

<TabItem value="unix-conda" label="Linux/macOS">

|

||||

<CondaUnix />

|

||||

</TabItem>

|

||||

|

||||

<TabItem value="windows-conda" label="Windows">

|

||||

<CondaWindows />

|

||||

</TabItem>

|

||||

</Tabs>

|

||||

</div>

|

||||

</TabItem>

|

||||

|

||||

<TabItem value="venv" label="Venv">

|

||||

<div className='mt-5'>

|

||||

<Venv />

|

||||

</div>

|

||||

</TabItem>

|

||||

|

||||

|

||||

<TabItem value="development" label="Development">

|

||||

<div className='mt-5'>

|

||||

<h3>Development Setup</h3>

|

||||

<p>

|

||||

For developers who want to contribute, check the Development Guide in <a href="/getting-started/advanced-topics">Advanced Topics</a>.

|

||||

</p>

|

||||

</div>

|

||||

</TabItem>

|

||||

</Tabs>

|

||||

</TabItem>

|

||||

|

||||

<TabItem value="kubernetes" label="Kubernetes">

|

||||

|

||||

<Tabs>

|

||||

|

||||

<TabItem value="helm" label="Helm">

|

||||

<Helm />

|

||||

</TabItem>

|

||||

@@ -76,41 +113,10 @@ Choose your preferred installation method below:

|

||||

<TabItem value="kustomize" label="Kustomize">

|

||||

<Kustomize />

|

||||

</TabItem>

|

||||

|

||||

</Tabs>

|

||||

|

||||

</TabItem>

|

||||

|

||||

<TabItem value="python" label="Python">

|

||||

|

||||

<Tabs>

|

||||

|

||||

<TabItem value="venv" label="Venv">

|

||||

<Venv />

|

||||

</TabItem>

|

||||

|

||||

<TabItem value="conda" label="Conda">

|

||||

<h3>Choose Your Platform</h3>

|

||||

<Tabs groupId="platform-conda">

|

||||

<TabItem value="unix-conda" label="Linux/macOS">

|

||||

<CondaUnix />

|

||||

</TabItem>

|

||||

|

||||

<TabItem value="windows-conda" label="Windows">

|

||||

<CondaWindows />

|

||||

</TabItem>

|

||||

</Tabs>

|

||||

</TabItem>

|

||||

|

||||

<TabItem value="development" label="Development">

|

||||

<h3>Development Setup</h3>

|

||||

<p>

|

||||

For developers who want to contribute, check the Development Guide in <a href="../advanced-topics">Advanced Topics</a>.

|

||||

</p>

|

||||

</TabItem>

|

||||

</Tabs>

|

||||

</TabItem>

|

||||

|

||||

|

||||

<TabItem value="third-party" label="Third Party">

|

||||

<Tabs>

|

||||

<TabItem value="pinokio-computer" label="Pinokio.computer">

|

||||

@@ -148,8 +154,3 @@ Need help? Have questions? Join our community:

|

||||

|

||||

Stay updated with the latest features, troubleshooting tips, and announcements!

|

||||

|

||||

## Conclusion

|

||||

|

||||

Thank you for choosing Open WebUI! We are committed to providing a powerful, privacy-focused interface for your LLM needs. If you encounter any issues, refer to the [Troubleshooting Guide](../../troubleshooting/index.mdx).

|

||||

|

||||

Happy exploring! 🎉

|

||||

|

||||

@@ -1,42 +1,26 @@

|

||||

## Updating

|

||||

|

||||

To update your local Docker installation to the latest version, you can either use **Watchtower** or manually update the container.

|

||||

|

||||

# Docker Compose Setup

|

||||

|

||||

Using Docker Compose simplifies the management of multi-container Docker applications.

|

||||

|

||||

## Example `docker-compose.yml`

|

||||

|

||||

```yaml
version: '3'
services:
  openwebui:
    image: ghcr.io/open-webui/open-webui:main
    ports:
      - "3000:8080"
    volumes:
      - open-webui:/app/backend/data
volumes:
  open-webui:
```

|

||||

|

||||

## Starting the Services

|

||||

|

||||

To start your services, run:

|

||||

|

||||

### Option 1: Using Watchtower

|

||||

With [Watchtower](https://containrrr.dev/watchtower/), you can automate the update process:

|

||||

```bash

|

||||

docker compose up -d

|

||||

docker run --rm --volume /var/run/docker.sock:/var/run/docker.sock containrrr/watchtower --run-once open-webui

|

||||

```

|

||||

_(Replace `open-webui` with your container's name if it's different.)_

|

||||

|

||||

---

|

||||

### Option 2: Manual Update

|

||||

1. Stop and remove the current container:

|

||||

```bash

|

||||

docker rm -f open-webui

|

||||

```

|

||||

2. Pull the latest version:

|

||||

```bash

|

||||

docker pull ghcr.io/open-webui/open-webui:main

|

||||

```

|

||||

3. Start the container again:

|

||||

```bash

|

||||

docker run -d -p 3000:8080 -v open-webui:/app/backend/data --name open-webui ghcr.io/open-webui/open-webui:main

|

||||

```

|

||||

|

||||

**Note:** For Nvidia GPU support, add the following to your service definition:

|

||||

|

||||

```yaml
deploy:
  resources:
    reservations:
      devices:
        - driver: nvidia
          count: all
          capabilities: [gpu]
```

|

||||

Both methods will get your Docker instance updated and running with the latest build.

|

||||

@@ -1,24 +1,12 @@

|

||||

|

||||

# Manual Docker Setup

|

||||

|

||||

If you prefer to set up Docker manually, follow these steps.

|

||||

|

||||

## Step 1: Pull the Open WebUI Image

|

||||

|

||||

```bash

|

||||

docker pull ghcr.io/open-webui/open-webui:main

|

||||

```

|

||||

|

||||

## Step 2: Run the Container

|

||||

|

||||

# Docker Setup

|

||||

```bash

|

||||

docker run -d -p 3000:8080 -v open-webui:/app/backend/data --name open-webui ghcr.io/open-webui/open-webui:main

|

||||

```

|

||||

**Note:** For Nvidia GPU support, add `--gpus all`. You can also use the `:cuda` tag for CUDA or `:ollama` for the bundled Ollama variant.

|

||||

|

||||

**Note:** For Nvidia GPU support, add `--gpus all` to the `docker run` command.

|

||||

For the latest bleeding-edge features, with potential bugs or occasional instability, use the `:dev` tag:

|

||||

```bash

|

||||

docker run -d -p 3000:8080 -v open-webui:/app/backend/data --name open-webui ghcr.io/open-webui/open-webui:dev

|

||||

```

|

||||

|

||||

## Access the WebUI

|

||||

|

||||

After the container is running, access Open WebUI at:

|

||||

|

||||

[http://localhost:3000](http://localhost:3000)

|

||||

Access Open WebUI at: [http://localhost:3000](http://localhost:3000)

|

||||

72

docs/tutorials/integrations/langfuse

Normal file


@@ -0,0 +1,72 @@

|

||||

---

|

||||

sidebar_position: 20

|

||||

title: "Monitoring and debugging with Langfuse"

|

||||

---

|

||||

|

||||

# Langfuse Integration with OpenWebUI

|

||||

|

||||

[Langfuse](https://langfuse.com/) ([GitHub](https://github.com/langfuse/langfuse)) offers open source observability and evaluations for OpenWebUI. By enabling the Langfuse integration, you can trace your application data with Langfuse to develop, monitor, and improve the use of OpenWebUI, including:

|

||||

|

||||

- Application [traces](https://langfuse.com/docs/tracing)

|

||||

- Usage patterns

|

||||

- Cost data by user and model

|

||||

- Replay sessions to debug issues

|

||||

- [Evaluations](https://langfuse.com/docs/scores/overview)

|

||||

|

||||

## How to integrate Langfuse with OpenWebUI:

|

||||

|

||||

|

||||

_Langfuse integration steps_

|

||||

|

||||

[Pipelines](https://github.com/open-webui/pipelines/) in OpenWebUI is a UI-agnostic framework for OpenAI API plugins. It lets you inject plugins that intercept, process, and forward user prompts to the final LLM, giving you more control over how prompts are handled.

|

||||

|

||||

To trace your application data with Langfuse, you can use the [Langfuse pipeline](https://github.com/open-webui/pipelines/blob/d4fca4c37c4b8603be7797245e749e9086f35130/examples/filters/langfuse_filter_pipeline.py), which enables real-time monitoring and analysis of message interactions.

|

||||

|

||||

## Quick Start Guide

|

||||

|

||||

### Step 1: Set Up OpenWebUI

Make sure OpenWebUI is running. For installation instructions, see the [OpenWebUI documentation](https://docs.openwebui.com/).

|

||||

|

||||

### Step 2: Set Up Pipelines

|

||||

|

||||

Launch [Pipelines](https://github.com/open-webui/pipelines/) by using Docker. Use the following command to start Pipelines:

|

||||

|

||||

```bash
docker run -p 9099:9099 --add-host=host.docker.internal:host-gateway -v pipelines:/app/pipelines --name pipelines --restart always ghcr.io/open-webui/pipelines:main
```

|

||||

|

||||

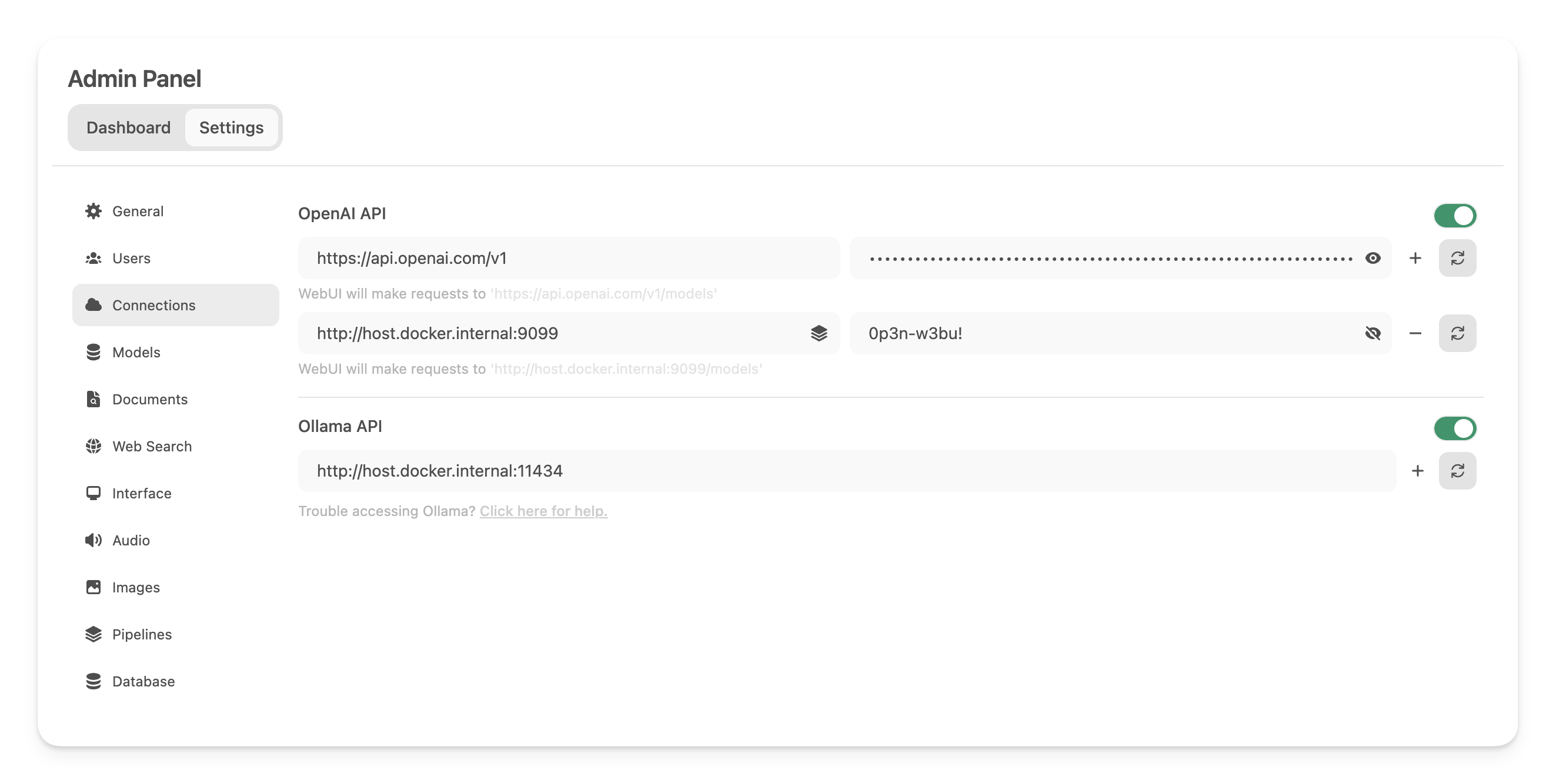

### Step 3: Connecting OpenWebUI with Pipelines

|

||||

|

||||

In the _Admin Settings_, create and save a new connection of type OpenAI API with the following details:

|

||||

|

||||

- **URL:** http://host.docker.internal:9099 (this is where the previously launched Docker container is running).

|

||||

- **Password:** 0p3n-w3bu! (the default password)
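Before saving the connection, it can help to confirm that the Pipelines container answers with these credentials. The sketch below only builds the request, so it runs without a live server. It assumes that Pipelines exposes an OpenAI-style `/v1/models` route and accepts the password as a Bearer token; the helper name `pipelines_models_request` is hypothetical.

```python
from urllib.request import Request


def pipelines_models_request(base_url, api_key):
    """Build (but do not send) a GET request for the Pipelines model list.

    Assumes an OpenAI-compatible /v1/models route and Bearer authentication.
    """
    url = base_url.rstrip("/") + "/v1/models"
    return Request(url, headers={"Authorization": f"Bearer {api_key}"})


# From the Docker host, the published port 9099 is reachable on localhost.
# From inside another container, use http://host.docker.internal:9099 instead.
request = pipelines_models_request("http://localhost:9099", "0p3n-w3bu!")

# To actually send the request:
#   from urllib.request import urlopen
#   with urlopen(request, timeout=5) as response:
#       print(response.status)
```

A successful response suggests that the URL and password entered in _Admin Settings_ are correct.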

|

||||

|

||||

|

||||

|

||||

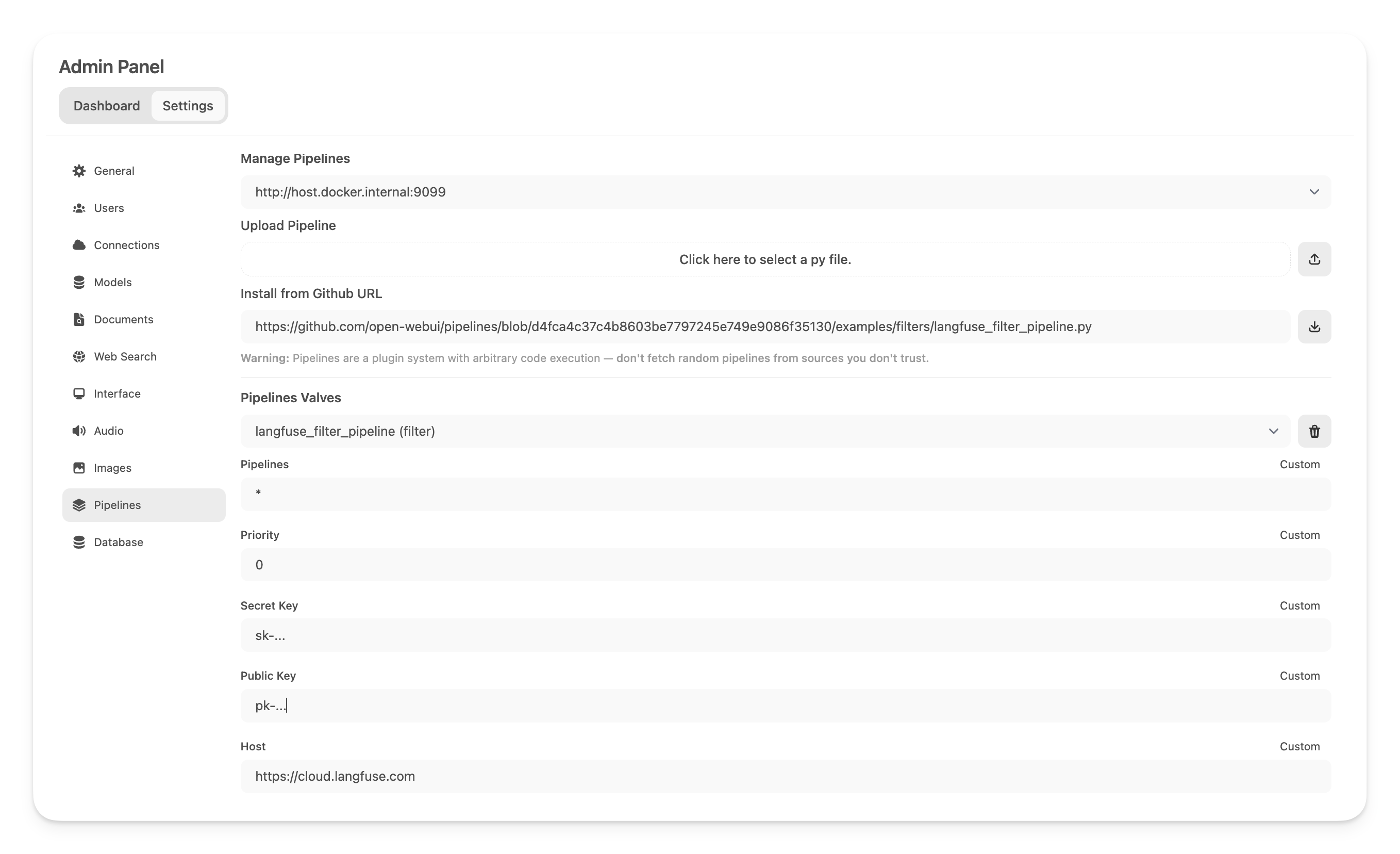

### Step 4: Adding the Langfuse Filter Pipeline

|

||||

|

||||

Next, navigate to _Admin Settings_ -> _Pipelines_ and add the Langfuse Filter Pipeline. Specify that Pipelines is listening on http://host.docker.internal:9099 (as configured earlier) and install the [Langfuse Filter Pipeline](https://github.com/open-webui/pipelines/blob/main/examples/filters/langfuse_filter_pipeline.py) by using the _Install from Github URL_ option with the following URL:

|

||||

|

||||

```
https://github.com/open-webui/pipelines/blob/main/examples/filters/langfuse_filter_pipeline.py
```

|

||||

|

||||

Now, add your Langfuse API keys below. If you haven't signed up to Langfuse yet, you can get your API keys by creating an account [here](https://cloud.langfuse.com).

|

||||

|

||||

|

||||

|

||||

_**Note:** To capture usage (token counts) for OpenAI models while streaming is enabled, navigate to the model settings in OpenWebUI and check the "Usage" [box](https://github.com/open-webui/open-webui/discussions/5770#discussioncomment-10778586) below *Capabilities*._

|

||||

|

||||

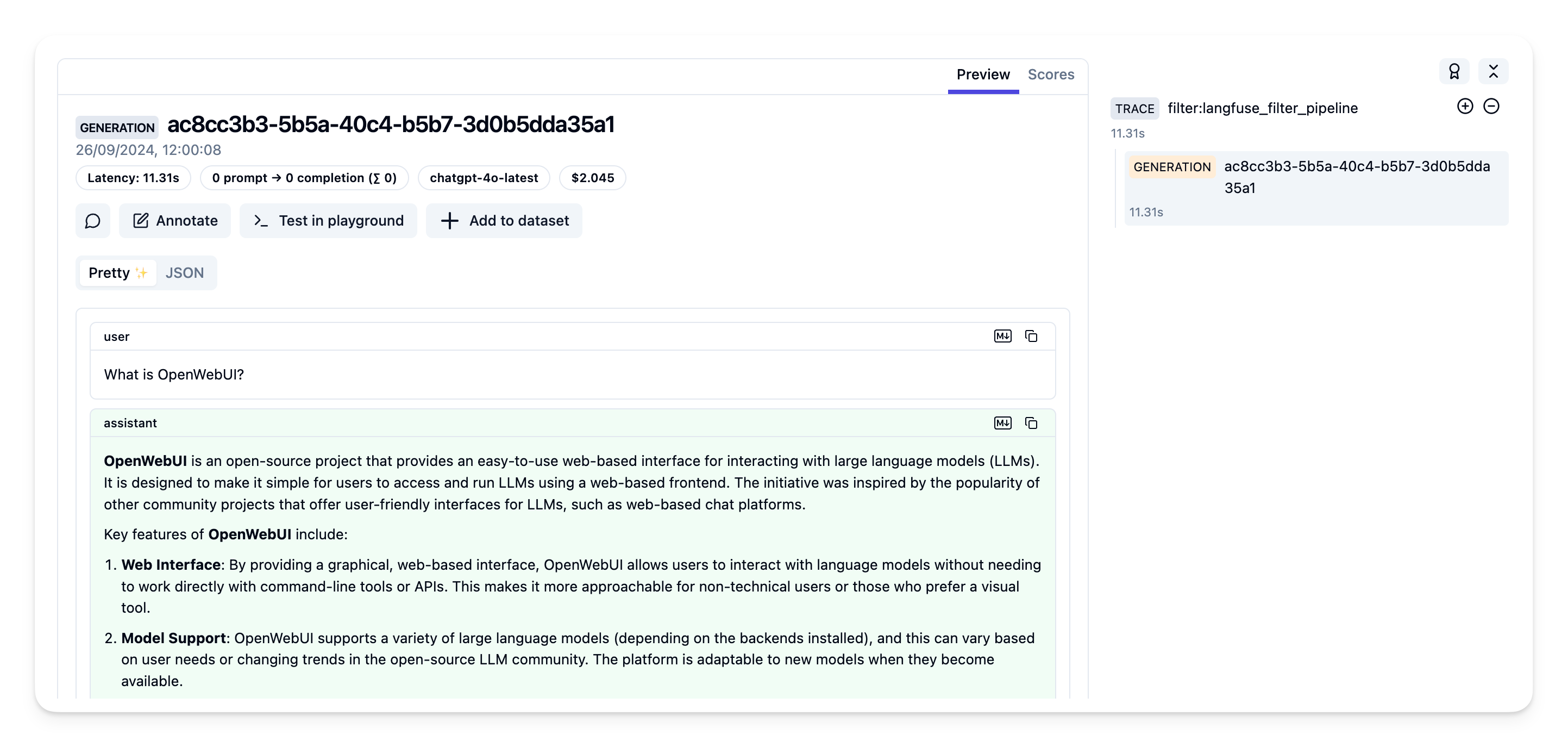

### Step 5: See your traces in Langfuse

|

||||

|

||||

You can now interact with your OpenWebUI application and see the traces in Langfuse.

|

||||

|

||||

[Example trace](https://cloud.langfuse.com/project/cloramnkj0002jz088vzn1ja4/traces/904a8c1f-4974-4f8f-8a2f-129ae78d99c5?observation=fe5b127b-e71c-45ab-8ee5-439d4c0edc28) in the Langfuse UI:

|

||||

|

||||

|

||||

|

||||

## Learn more

|

||||

|

||||

For a comprehensive guide on OpenWebUI Pipelines, visit [this post](https://ikasten.io/2024/06/03/getting-started-with-openwebui-pipelines/).

|

||||

BIN

static/img/folder-demo.gif

Normal file


Binary file not shown.

|

After Width: | Height: | Size: 370 KiB |

BIN

static/img/tag-demo.gif

Normal file


Binary file not shown.

|

After Width: | Height: | Size: 284 KiB |
