mirror of https://github.com/open-webui/docs

synced 2025-06-16 11:28:36 +00:00

Merge pull request #495 from Classic298/main

chore: rename openwebui -> Open WebUI and small changes to OpenAI Quick Start Guide

commit 9215594498

@ -27,7 +27,7 @@ Integration Steps

 docker run -p 5001:5001 -e DOCLING_SERVE_ENABLE_UI=true quay.io/docling-project/docling-serve

 ```

-### Step 2: Configure OpenWebUI to use Docling

+### Step 2: Configure Open WebUI to use Docling

 * Log in to your Open WebUI instance.

 * Navigate to the `Admin Panel` settings menu.

@ -31,7 +31,7 @@ Integration Steps

 * Go to `API Keys` or `https://console.mistral.ai/api-keys`

 * Create a new key and make sure to copy it

-### Step 3: Configure OpenWebUI to use Mistral OCR

+### Step 3: Configure Open WebUI to use Mistral OCR

 * Log in to your Open WebUI instance.

 * Navigate to the `Admin Panel` settings menu.

@ -50,7 +50,7 @@ class Tools:

 ```

 ### Type Hints

-Each tool must have type hints for arguments. As of version OpenWebUI version 0.4.3, the types may also be nested, such as `queries_and_docs: list[tuple[str, int]]`. Those type hints are used to generate the JSON schema that is sent to the model. Tools without type hints will work with a lot less consistency.

+Each tool must have type hints for arguments. As of version Open WebUI version 0.4.3, the types may also be nested, such as `queries_and_docs: list[tuple[str, int]]`. Those type hints are used to generate the JSON schema that is sent to the model. Tools without type hints will work with a lot less consistency.

 ### Valves and UserValves - (optional, but HIGHLY encouraged)
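The "Type Hints" paragraph in the hunk above says that argument type hints, including nested ones such as `queries_and_docs: list[tuple[str, int]]`, are used to generate the JSON schema sent to the model. The sketch below is illustrative only. It shows one way such a conversion could be written with the standard `typing` and `inspect` modules. The helpers `type_to_schema` and `tool_parameters_schema` and the example method `rank_documents` are hypothetical, and this is not Open WebUI's actual schema generator. Mapping tuples to `prefixItems` (JSON Schema 2020-12) is one possible choice among several.

```python
import inspect
import typing
from typing import get_args, get_origin

# Hypothetical mapping from primitive Python types to JSON Schema type names.
PRIMITIVES = {str: "string", int: "integer", float: "number", bool: "boolean"}


def type_to_schema(tp) -> dict:
    """Recursively convert a (possibly nested) type hint into a JSON Schema fragment."""
    if tp in PRIMITIVES:
        return {"type": PRIMITIVES[tp]}
    origin, args = get_origin(tp), get_args(tp)
    if origin is list and args:
        # list[X] -> array whose items all follow X's schema
        return {"type": "array", "items": type_to_schema(args[0])}
    if origin is tuple and args:
        # tuple[A, B] -> fixed-length array with one schema per position
        items = [type_to_schema(a) for a in args]
        return {"type": "array", "prefixItems": items,
                "minItems": len(items), "maxItems": len(items)}
    if origin is dict and args:
        # dict[str, V] -> object whose values all follow V's schema
        return {"type": "object", "additionalProperties": type_to_schema(args[1])}
    raise TypeError(f"unsupported type hint: {tp!r}")


def tool_parameters_schema(func) -> dict:
    """Build an object schema from a tool method's annotated parameters (hypothetical helper)."""
    hints = typing.get_type_hints(func)
    properties, required = {}, []
    for name, param in inspect.signature(func).parameters.items():
        if name == "self":
            continue
        if name not in hints:
            # Stricter than the docs, which only say unhinted tools work less consistently.
            raise TypeError(f"parameter {name!r} has no type hint")
        properties[name] = type_to_schema(hints[name])
        if param.default is inspect.Parameter.empty:
            required.append(name)
    return {"type": "object", "properties": properties, "required": required}


class Tools:
    def rank_documents(self, queries_and_docs: list[tuple[str, int]], top_k: int = 3) -> str:
        """Hypothetical tool method using the nested hint quoted in the docs."""
        return f"{len(queries_and_docs)} pairs, top {top_k}"


print(tool_parameters_schema(Tools.rank_documents))
```

This sketch rejects unannotated parameters outright. That is a stricter design choice than the documentation describes.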

@ -312,9 +312,9 @@ async def test_function(

 In the Tools definition metadata you can specify custom packages. When you click `Save` the line will be parsed and `pip install` will be run on all requirements at once.

-Keep in mind that as pip is used in the same process as Open-WebUI, the UI will be completely unresponsive during the installation.

+Keep in mind that as pip is used in the same process as Open WebUI, the UI will be completely unresponsive during the installation.

-No measures are taken to handle package conflicts with Open-WebUI's requirements. That means that specifying requirements can break OpenWebUI if you're not careful. You might be able to work around this by specifying `open-webui` itself as a requirement.

+No measures are taken to handle package conflicts with Open WebUI's requirements. That means that specifying requirements can break Open WebUI if you're not careful. You might be able to work around this by specifying `open-webui` itself as a requirement.

 <details>

@ -13,20 +13,20 @@ Open WebUI makes it easy to connect and use OpenAI and other OpenAI-compatible A

 ## Step 1: Get Your OpenAI API Key

-To use OpenAI models (such as GPT-4 or GPT-3.5), you need an API key from a supported provider.

+To use OpenAI models (such as GPT-4 or o3-mini), you need an API key from a supported provider.

 You can use:

 - OpenAI directly (https://platform.openai.com/account/api-keys)

 - Azure OpenAI

-- An OpenAI-compatible service (e.g., LocalAI, FastChat, Helicone, etc.)

+- Any OpenAI-compatible service (e.g., LocalAI, FastChat, Helicone, LiteLLM, OpenRouter etc.)

 👉 Once you have the key, copy it and keep it handy.

 For most OpenAI usage, the default API base URL is:

 https://api.openai.com/v1

-Other providers may use different URLs — check your provider’s documentation.

+Other providers use different URLs — check your provider’s documentation.

 ---
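The OpenAI Quick Start hunk above gives `https://api.openai.com/v1` as the default API base URL. It also notes that other providers use different URLs. OpenAI-style clients typically append endpoint paths such as `/models` to that base URL and send the key as a Bearer token. The sketch below only builds such a request with the standard library and does not send it. `build_models_request` is a hypothetical helper. Azure OpenAI uses its own URL layout and authentication, so the sketch may not apply to it as-is.

```python
from urllib.request import Request


def build_models_request(base_url: str, api_key: str) -> Request:
    """Build, but do not send, a GET request for an OpenAI-style model list (hypothetical helper)."""
    # Normalise a trailing slash so ".../v1" and ".../v1/" yield the same endpoint.
    url = base_url.rstrip("/") + "/models"
    # OpenAI-style APIs expect the key as a Bearer token in the Authorization header.
    return Request(url, headers={"Authorization": f"Bearer {api_key}"}, method="GET")


req = build_models_request("https://api.openai.com/v1", "sk-your-key")
print(req.get_method(), req.full_url)
# Sending it would be urllib.request.urlopen(req), which needs network access and a valid key.
```

Swapping in another provider only changes the base URL argument. This matches the hunk's advice to check your provider's documentation for the right URL.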

@ -38,7 +38,7 @@ Once Open WebUI is running:

 2. Navigate to **Connections > OpenAI > Manage** (look for the wrench icon).

 3. Click ➕ **Add New Connection**.

 4. Fill in the following:

-- API URL: https://api.openai.com/v1

+- API URL: https://api.openai.com/v1 (or the URL of your specific provider)

 - API Key: Paste your key here

 5. Click Save ✅.

@ -61,7 +61,7 @@ Here’s what model selection looks like:

-Simply choose GPT-4, GPT-3.5, or any compatible model offered by your provider.

+Simply choose GPT-4, o3-mini, or any compatible model offered by your provider.

 ---

@ -3,9 +3,9 @@ sidebar_position: 20

 title: "💥 Monitoring and Debugging with Langfuse"

 ---

-# Langfuse Integration with OpenWebUI

+# Langfuse Integration with Open WebUI

-[Langfuse](https://langfuse.com/) ([GitHub](https://github.com/langfuse/langfuse)) offers open source observability and evaluations for OpenWebUI. By enabling the Langfuse integration, you can trace your application data with Langfuse to develop, monitor, and improve the use of OpenWebUI, including:

+[Langfuse](https://langfuse.com/) ([GitHub](https://github.com/langfuse/langfuse)) offers open source observability and evaluations for Open WebUI. By enabling the Langfuse integration, you can trace your application data with Langfuse to develop, monitor, and improve the use of Open WebUI, including:

 - Application [traces](https://langfuse.com/docs/tracing)

 - Usage patterns

@ -13,20 +13,20 @@ title: "💥 Monitoring and Debugging with Langfuse"

 - Replay sessions to debug issues

 - [Evaluations](https://langfuse.com/docs/scores/overview)

-## How to integrate Langfuse with OpenWebUI

+## How to integrate Langfuse with Open WebUI

 _Langfuse integration steps_

-[Pipelines](https://github.com/open-webui/pipelines/) in OpenWebUi is an UI-agnostic framework for OpenAI API plugins. It enables the injection of plugins that intercept, process, and forward user prompts to the final LLM, allowing for enhanced control and customization of prompt handling.

+[Pipelines](https://github.com/open-webui/pipelines/) in Open WebUI is an UI-agnostic framework for OpenAI API plugins. It enables the injection of plugins that intercept, process, and forward user prompts to the final LLM, allowing for enhanced control and customization of prompt handling.

 To trace your application data with Langfuse, you can use the [Langfuse pipeline](https://github.com/open-webui/pipelines/blob/d4fca4c37c4b8603be7797245e749e9086f35130/examples/filters/langfuse_filter_pipeline.py), which enables real-time monitoring and analysis of message interactions.

 ## Quick Start Guide

-### Step 1: Setup OpenWebUI

+### Step 1: Setup Open WebUI

-Make sure to have OpenWebUI running. To do so, have a look at the [OpenWebUI documentation](https://docs.openwebui.com/).

+Make sure to have Open WebUI running. To do so, have a look at the [Open WebUI documentation](https://docs.openwebui.com/).

 ### Step 2: Set Up Pipelines
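The Langfuse hunk above describes Pipelines as a framework for plugins that intercept, process, and forward user prompts to the final LLM. The sketch below illustrates that intercept-and-forward flow in plain Python with a hypothetical filter. Its `inlet` hook sees the request body before the model, and its `outlet` hook sees the body after the reply. The hook names are modeled on the Pipelines filter examples such as the Langfuse filter. The `PromptLoggingFilter` class, its `trace` list, the `run_through_filter` driver and the `echo_model` stand-in are assumptions made for illustration, not the Pipelines API.

```python
import asyncio
from typing import Callable, Optional


class PromptLoggingFilter:
    """Hypothetical filter that records the last message seen in each direction."""

    def __init__(self) -> None:
        self.trace: list[tuple[str, str]] = []  # (direction, content) pairs

    async def inlet(self, body: dict, user: Optional[dict] = None) -> dict:
        # Runs before the request is forwarded to the model.
        self.trace.append(("in", body["messages"][-1]["content"]))
        return body

    async def outlet(self, body: dict, user: Optional[dict] = None) -> dict:
        # Runs after the model has produced its reply.
        self.trace.append(("out", body["messages"][-1]["content"]))
        return body


async def run_through_filter(flt, body: dict, model: Callable[[list], str]) -> dict:
    """Illustrative driver: inlet -> model -> outlet."""
    body = await flt.inlet(body)
    reply = model(body["messages"])
    body = {**body, "messages": body["messages"] + [{"role": "assistant", "content": reply}]}
    return await flt.outlet(body)


def echo_model(messages: list) -> str:
    # Stand-in for the final LLM.
    return "echo: " + messages[-1]["content"]


flt = PromptLoggingFilter()
request = {"model": "demo", "messages": [{"role": "user", "content": "hi"}]}
result = asyncio.run(run_through_filter(flt, request, echo_model))
print(flt.trace)
```

An observability filter can record traffic in both directions without the model or the UI knowing it is there. It only has to hook both ends and return the body unchanged.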

|

||||

|

||||

@ -36,14 +36,14 @@ Launch [Pipelines](https://github.com/open-webui/pipelines/) by using Docker. Us

|

||||

docker run -p 9099:9099 --add-host=host.docker.internal:host-gateway -v pipelines:/app/pipelines --name pipelines --restart always ghcr.io/open-webui/pipelines:main

|

||||

```

|

||||

|

||||

### Step 3: Connecting OpenWebUI with Pipelines

|

||||

### Step 3: Connecting Open WebUI with Pipelines

|

||||

|

||||

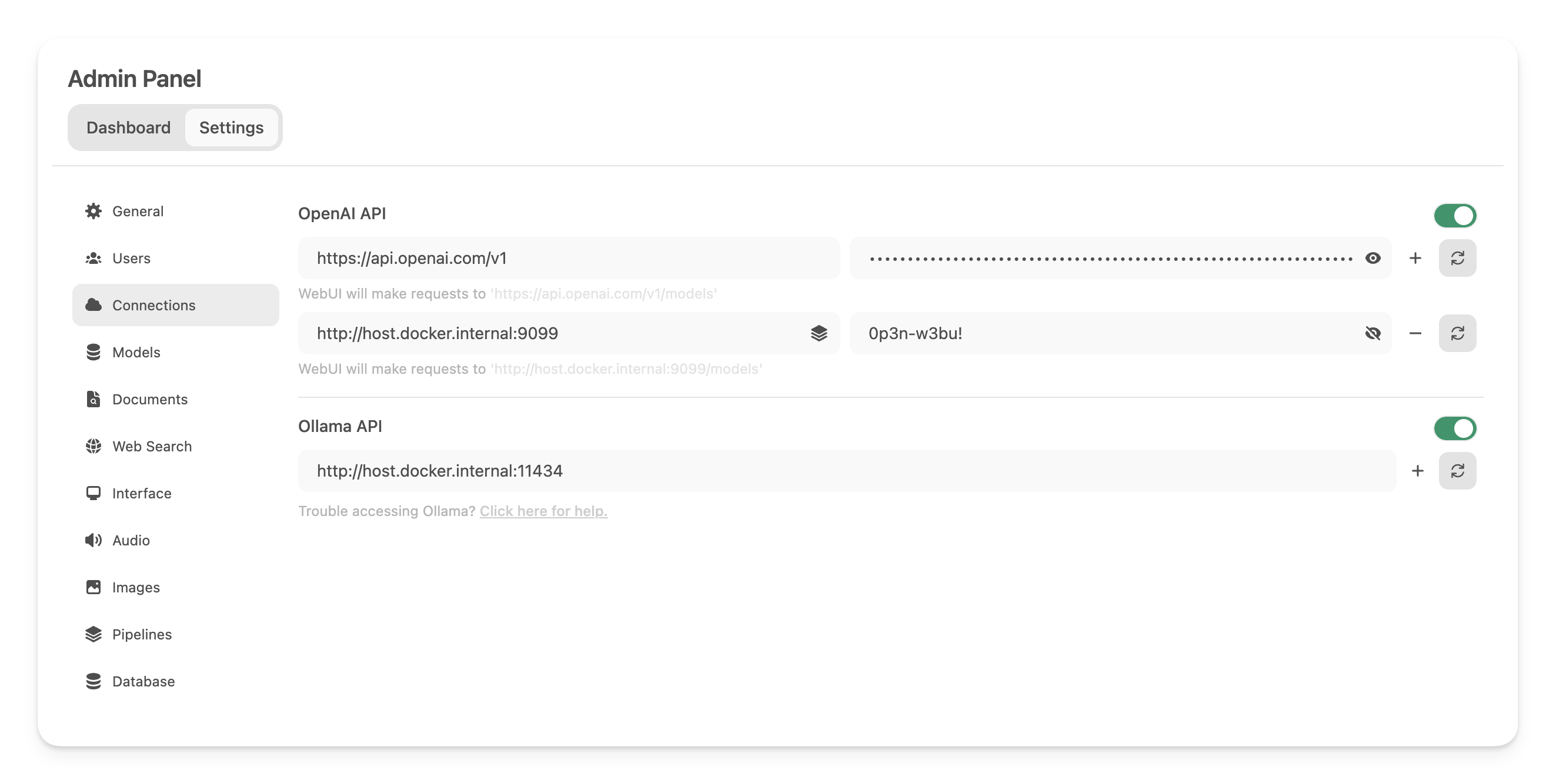

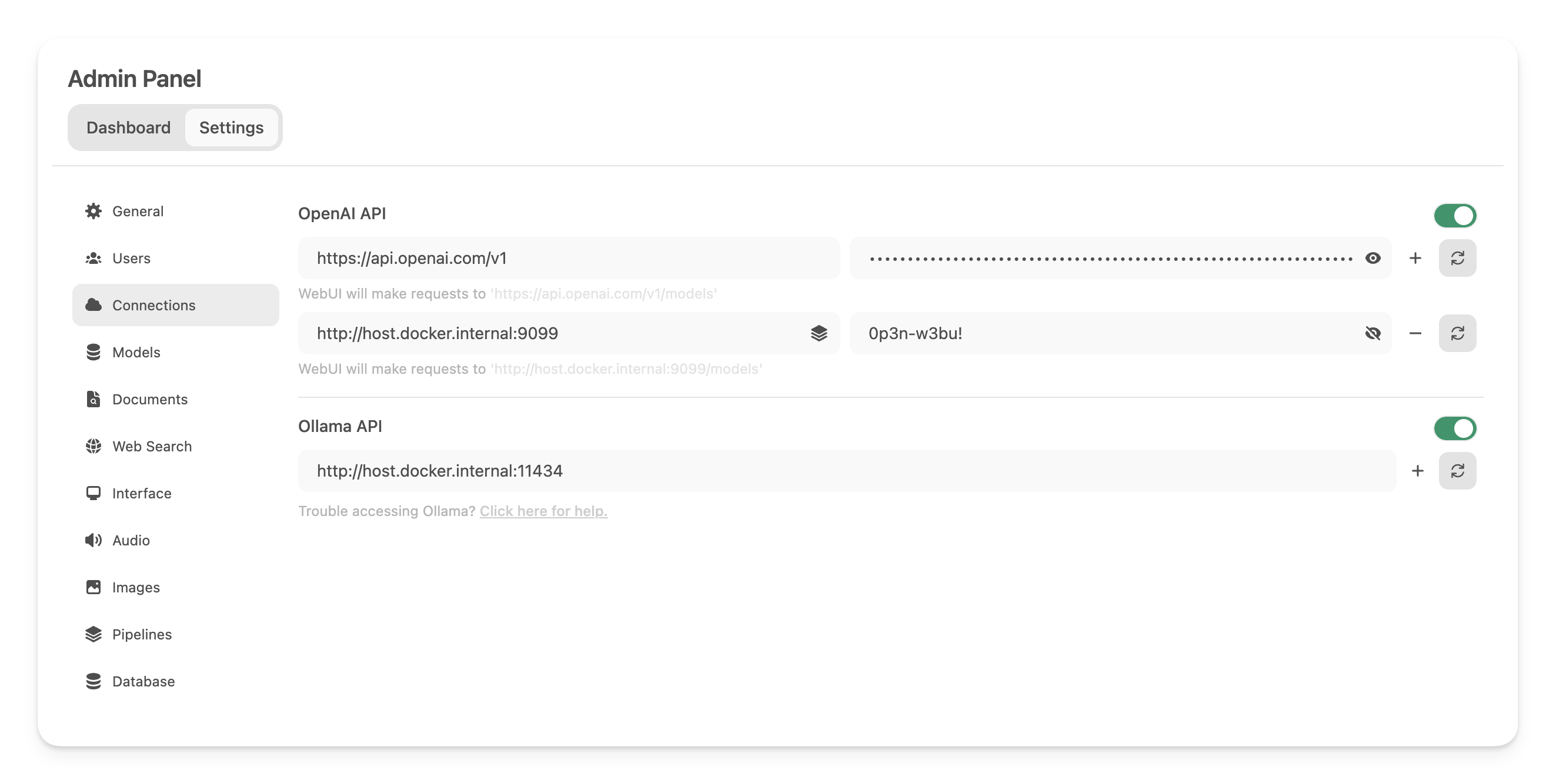

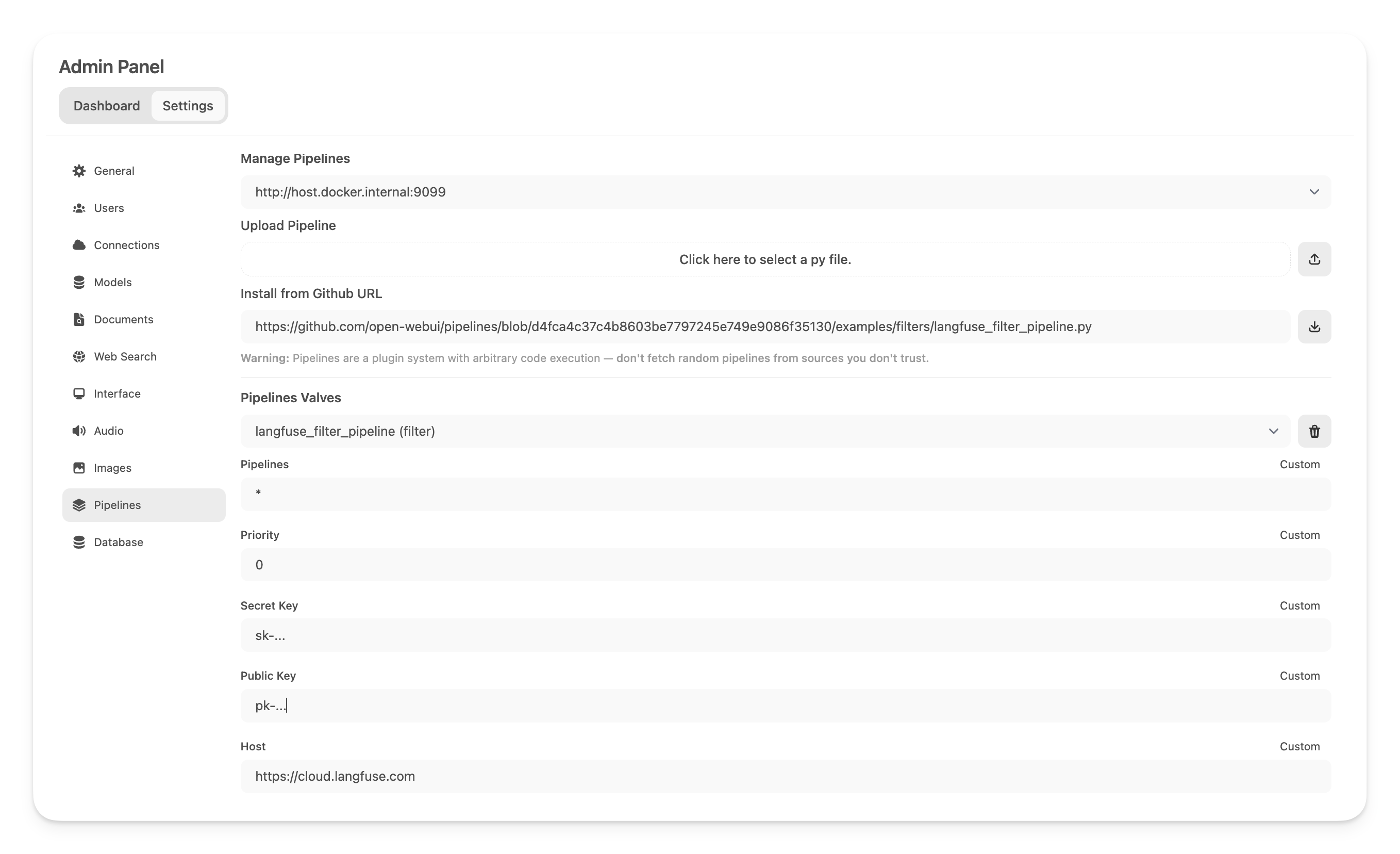

In the _Admin Settings_, create and save a new connection of type OpenAI API with the following details:

|

||||

|

||||

- **URL:** http://host.docker.internal:9099 (this is where the previously launched Docker container is running).

|

||||

- **Password:** 0p3n-w3bu! (standard password)

|

||||

|

||||

|

||||

|

||||

|

||||

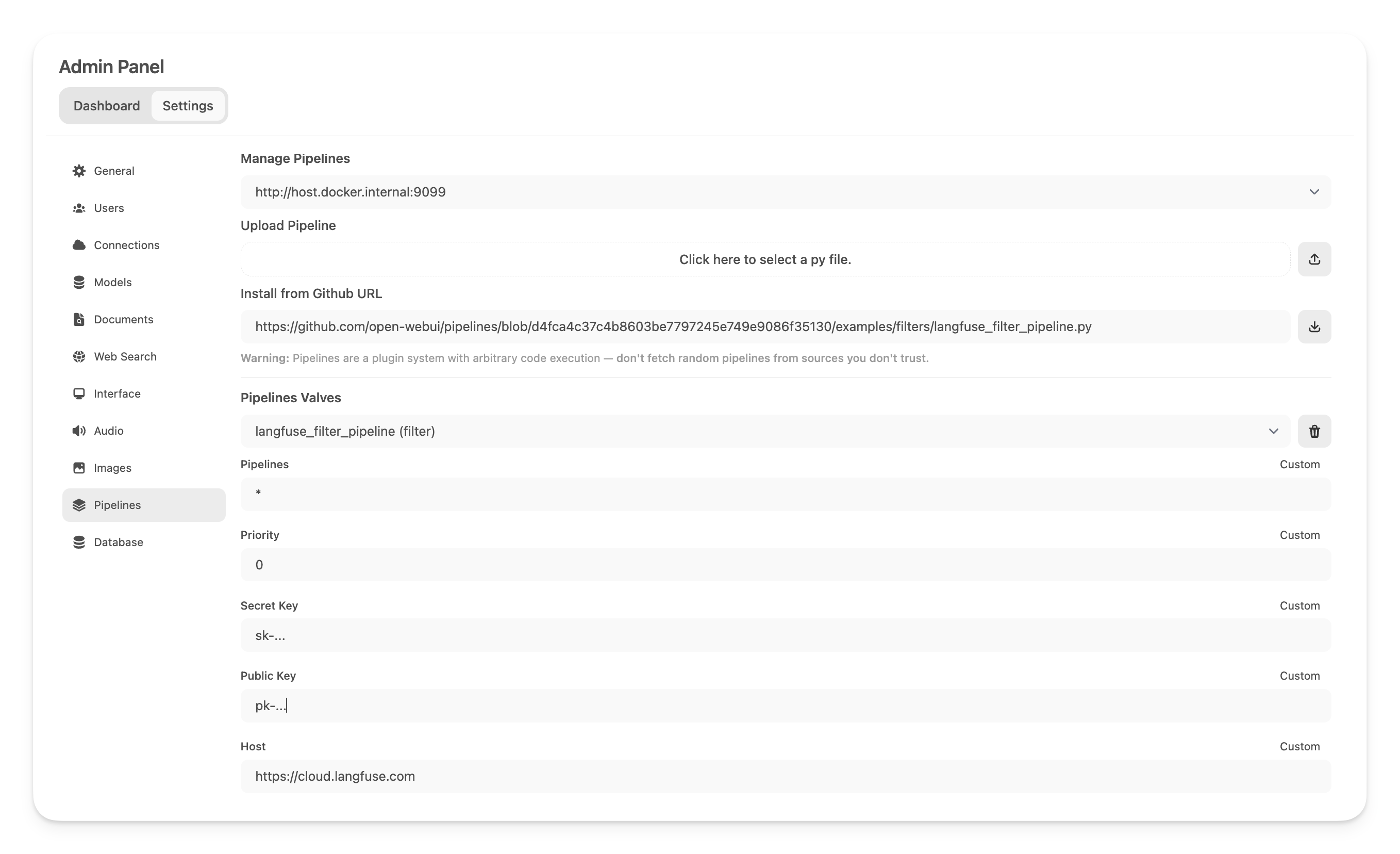

### Step 4: Adding the Langfuse Filter Pipeline

|

||||

|

||||

@ -55,18 +55,18 @@ https://github.com/open-webui/pipelines/blob/main/examples/filters/langfuse_filt

|

||||

|

||||

Now, add your Langfuse API keys below. If you haven't signed up to Langfuse yet, you can get your API keys by creating an account [here](https://cloud.langfuse.com).

|

||||

|

||||

|

||||

|

||||

|

||||

_**Note:** Capture usage (token counts) for OpenAi models while streaming is enabled, you have to navigate to the model settings in OpenWebUI and check the "Usage" [box](https://github.com/open-webui/open-webui/discussions/5770#discussioncomment-10778586) below _Capabilities_._

|

||||

_**Note:** Capture usage (token counts) for OpenAi models while streaming is enabled, you have to navigate to the model settings in Open WebUI and check the "Usage" [box](https://github.com/open-webui/open-webui/discussions/5770#discussioncomment-10778586) below _Capabilities_._

|

||||

|

||||

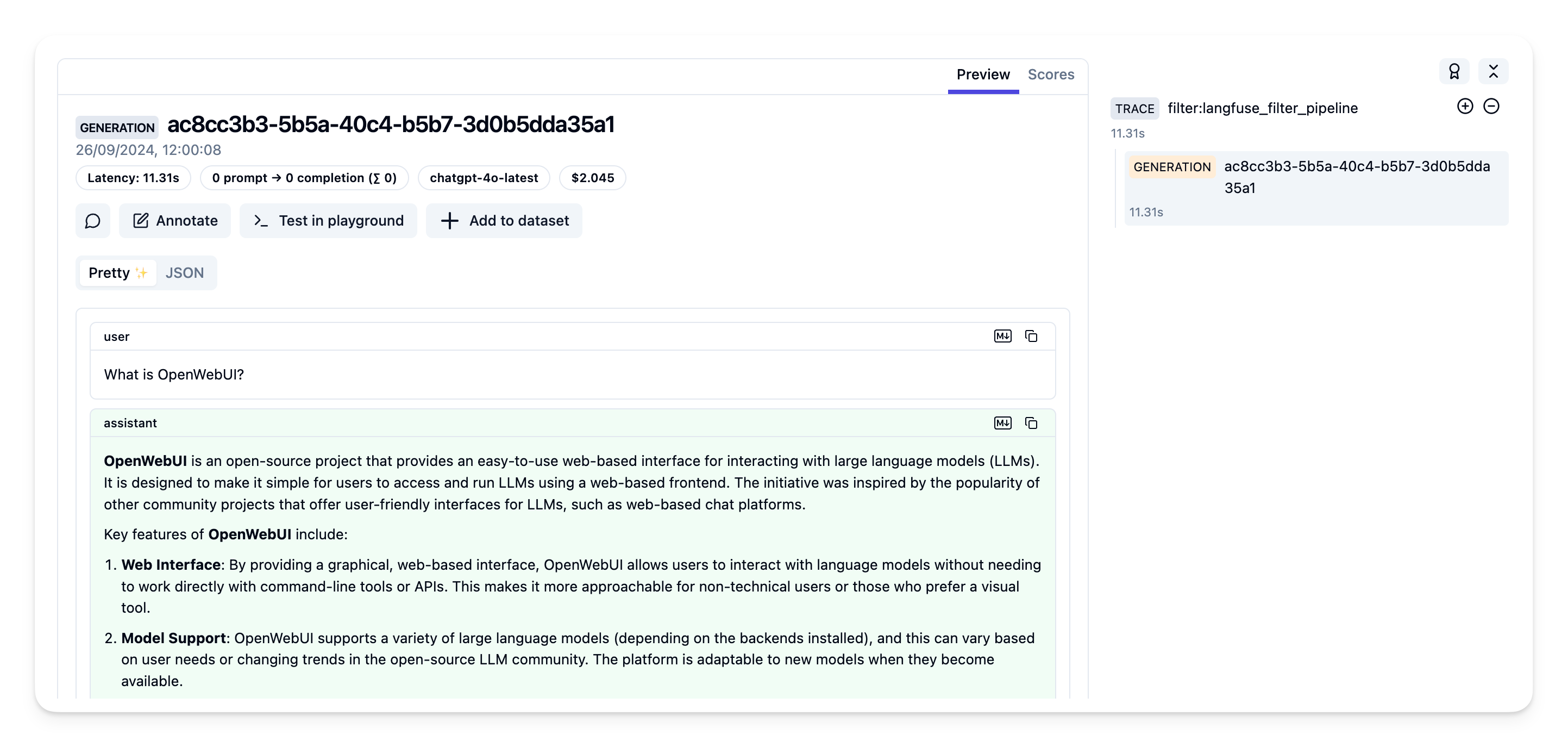

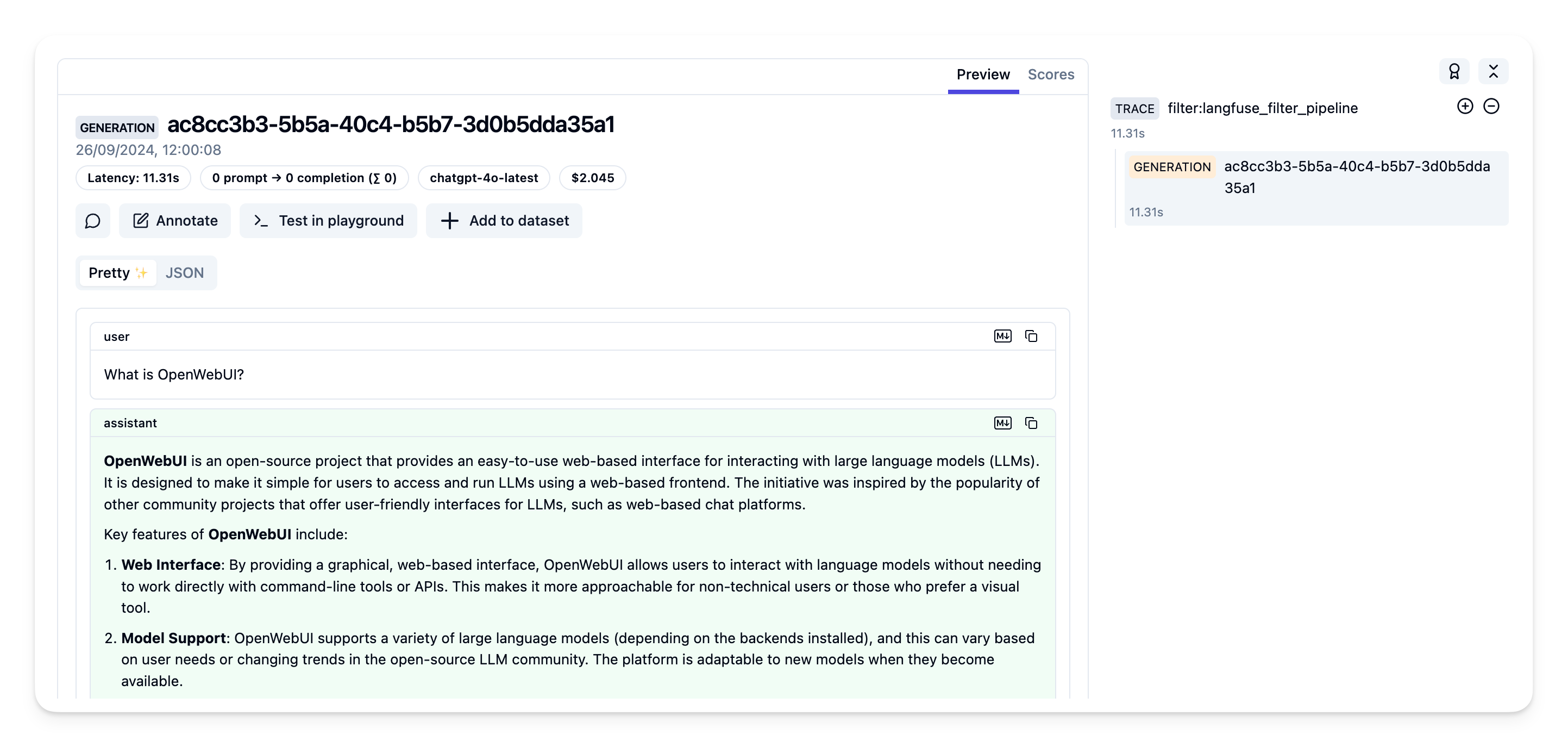

### Step 5: See your traces in Langfuse

|

||||

|

||||

You can now interact with your OpenWebUI application and see the traces in Langfuse.

|

||||

You can now interact with your Open WebUI application and see the traces in Langfuse.

|

||||

|

||||

[Example trace](https://cloud.langfuse.com/project/cloramnkj0002jz088vzn1ja4/traces/904a8c1f-4974-4f8f-8a2f-129ae78d99c5?observation=fe5b127b-e71c-45ab-8ee5-439d4c0edc28) in the Langfuse UI:

|

||||

|

||||

|

||||

|

||||

|

||||

## Learn more

|

||||

|

||||

For a comprehensive guide on OpenWebUI Pipelines, visit [this post](https://ikasten.io/2024/06/03/getting-started-with-openwebui-pipelines/).

|

||||

For a comprehensive guide on Open WebUI Pipelines, visit [this post](https://ikasten.io/2024/06/03/getting-started-with-openwebui-pipelines/).

@ -4,7 +4,7 @@ title: "🔠 LibreTranslate Integration"

 ---

 :::warning

-This tutorial is a community contribution and is not supported by the OpenWebUI team. It serves only as a demonstration on how to customize OpenWebUI for your specific use case. Want to contribute? Check out the contributing tutorial.

+This tutorial is a community contribution and is not supported by the Open WebUI team. It serves only as a demonstration on how to customize Open WebUI for your specific use case. Want to contribute? Check out the contributing tutorial.

 :::

 Overview

@ -11,11 +11,11 @@ This tutorial is a community contribution and is not supported by the Open WebUI

 Nobody likes losing data!

-If you're self-hosting OpenWebUI, then you may wish to institute some kind of formal backup plan in order to ensure that you retain a second and third copy of parts of your configuration.

+If you're self-hosting Open WebUI, then you may wish to institute some kind of formal backup plan in order to ensure that you retain a second and third copy of parts of your configuration.

 This guide is intended to recommend some basic recommendations for how users might go about doing that.

-This guide assumes that the user has installed OpenWebUI via Docker (or intends to do so)

+This guide assumes that the user has installed Open WebUI via Docker (or intends to do so)

 ## Ensuring data persistence
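The backup guide hunk above recommends keeping a second and third copy of parts of your configuration. The sketch below illustrates that idea by archiving a directory into timestamped `.tar.gz` files and keeping only the newest few. It assumes you have a readable copy of the data directory on the host, and the paths are placeholders. `make_backup` and `prune_backups` are hypothetical helpers, not part of Open WebUI. Copying a SQLite database while the application is writing to it can capture an inconsistent state, so the safer assumption is that the container is stopped first.

```python
import tarfile
import tempfile
import time
from pathlib import Path


def prune_backups(dest_dir: Path, prefix: str, keep: int) -> None:
    """Delete all but the newest `keep` archives named '<prefix>-<timestamp>.tar.gz'."""
    # YYYYmmdd-HHMMSS stamps sort lexicographically in chronological order.
    archives = sorted(dest_dir.glob(f"{prefix}-*.tar.gz"))
    stale = archives[:-keep] if keep > 0 else archives
    for old in stale:
        old.unlink()


def make_backup(src: Path, dest_dir: Path, keep: int = 3) -> Path:
    """Archive `src` into a timestamped .tar.gz under `dest_dir`, then prune old copies."""
    dest_dir.mkdir(parents=True, exist_ok=True)
    stamp = time.strftime("%Y%m%d-%H%M%S")
    archive = dest_dir / f"{src.name}-{stamp}.tar.gz"
    with tarfile.open(archive, "w:gz") as tar:
        tar.add(src, arcname=src.name)  # adds the directory recursively
    prune_backups(dest_dir, src.name, keep)
    return archive


# Demo on throwaway directories; point `src` at your real data copy instead.
with tempfile.TemporaryDirectory() as tmp:
    data = Path(tmp) / "data"
    data.mkdir()
    (data / "webui.db").write_text("placeholder")
    latest = make_backup(data, Path(tmp) / "backups", keep=3)
    with tarfile.open(latest) as tar:
        print(latest.name, tar.getnames())
```

The timestamp has one-second resolution, so two runs within the same second reuse a file name. A scheduled job (for example from cron) runs far less often than that. For deduplication or encryption, tools such as `borgbackup` or `restic`, which the guide mentions later, are the better fit.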

@ -27,7 +27,7 @@ Docker containers are ephemeral and data must be persisted to ensure its surviva

 If you're using the Docker Compose from the project repository, you will be deploying Open Web UI using Docker volumes.

-For Ollama and OpenWebUI the mounts are:

+For Ollama and Open WebUI the mounts are:

 ```yaml

 ollama:

@ -349,7 +349,7 @@ In addition to scripting your own backup jobs, you can find commercial offerings

 # Host Level Backups

-Your OpenWebUI instance might be provisioned on a host (physical or virtualised) which you control.

+Your Open WebUI instance might be provisioned on a host (physical or virtualised) which you control.

 Host level backups involve creating snapshots or backups but of the entire VM rather than running applications.

@ -388,4 +388,4 @@ In the interest of keeping this guide reasonably thorough these additional subje

 | Encryption | Modify backup scripts to incorporate encryption at rest for enhanced security. |

 | Disaster Recovery and Testing | Develop a disaster recovery plan and regularly test the backup and restore process. |

 | Alternative Backup Tools | Explore other command-line backup tools like `borgbackup` or `restic` for advanced features. |

-| Email Notifications and Webhooks | Implement email notifications or webhooks to monitor backup success or failure. |

+| Email Notifications and Webhooks | Implement email notifications or webhooks to monitor backup success or failure. |

@ -1,8 +1,8 @@

 ### Using a Self-Signed Certificate and Nginx on Windows without Docker

-For basic internal/development installations, you can use nginx and a self-signed certificate to proxy openwebui to https, allowing use of features such as microphone input over LAN. (By default, most browsers will not allow microphone input on insecure non-localhost urls)

+For basic internal/development installations, you can use nginx and a self-signed certificate to proxy Open WebUI to https, allowing use of features such as microphone input over LAN. (By default, most browsers will not allow microphone input on insecure non-localhost urls)

-This guide assumes you installed openwebui using pip and are running `open-webui serve`

+This guide assumes you installed Open WebUI using pip and are running `open-webui serve`

 #### Step 1: Installing openssl for certificate generation

@ -46,7 +46,7 @@ Move the generated nginx.key and nginx.crt files to a folder of your choice, or

 Open C:\nginx\conf\nginx.conf in a text editor

-If you want openwebui to be accessible over your local LAN, be sure to note your LAN ip address using `ipconfig` e.g. 192.168.1.15

+If you want Open WebUI to be accessible over your local LAN, be sure to note your LAN ip address using `ipconfig` e.g. 192.168.1.15

 Set it up as follows:

@ -145,4 +145,4 @@ Run nginx by running `nginx`. If an nginx service is already started, you can re

 ---

-You should now be able to access openwebui on https://192.168.1.15 (or your own LAN ip as appropriate). Be sure to allow windows firewall access as needed.

+You should now be able to access Open WebUI on https://192.168.1.15 (or your own LAN ip as appropriate). Be sure to allow windows firewall access as needed.

@ -98,7 +98,7 @@ Community-contributed tutorials must include the the following:

 ```

 :::warning

-This tutorial is a community contribution and is not supported by the Open WebUI team. It serves only as a demonstration on how to customize OpenWebUI for your specific use case. Want to contribute? Check out the contributing tutorial.

+This tutorial is a community contribution and is not supported by the Open WebUI team. It serves only as a demonstration on how to customize Open WebUI for your specific use case. Want to contribute? Check out the contributing tutorial.

 :::

 ```
