
Evaluation Results | Model Download | Setup Environment | Quick Start | Questions and Bugs | License | Citation | Contact

DeepSeek-Prover-V1.5: Harnessing Proof Assistant Feedback for Reinforcement Learning and Monte-Carlo Tree Search

1. Introduction

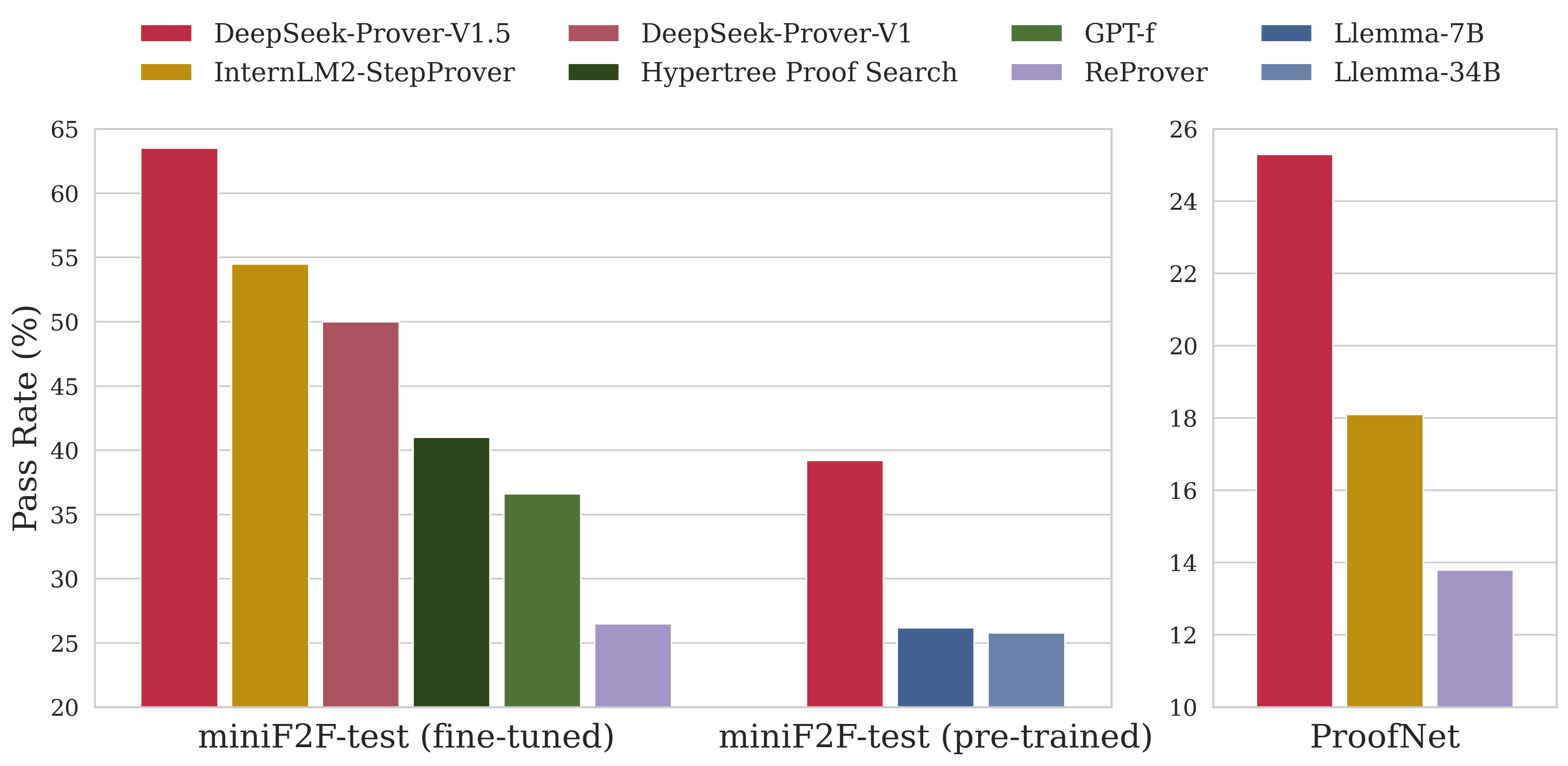

We introduce DeepSeek-Prover-V1.5, an open-source language model designed for theorem proving in Lean 4, which enhances DeepSeek-Prover-V1 by optimizing both training and inference processes. Pre-trained on DeepSeekMath-Base with specialization in formal mathematical languages, the model undergoes supervised fine-tuning using an enhanced formal theorem proving dataset derived from DeepSeek-Prover-V1. Further refinement is achieved through reinforcement learning from proof assistant feedback (RLPAF). Beyond the single-pass whole-proof generation approach of DeepSeek-Prover-V1, we propose RMaxTS, a variant of Monte-Carlo tree search that employs an intrinsic-reward-driven exploration strategy to generate diverse proof paths. DeepSeek-Prover-V1.5 demonstrates significant improvements over DeepSeek-Prover-V1, achieving new state-of-the-art results on the test set of the high school level miniF2F benchmark (63.5%) and the undergraduate level ProofNet benchmark (25.3%).

2. Evaluation Results

| Model | miniF2F-test | ProofNet |
|---|---|---|
| ReProver | 26.5% | 13.8% |
| GPT-f | 36.6% | - |
| Hypertree Proof Search | 41.0% | - |
| InternLM2-StepProver | 54.5% | 18.1% |
| DeepSeek-Prover-V1 | 50.0% | - |
| DeepSeek-Prover-V1.5-Base | 42.2% | 13.2% |
| DeepSeek-Prover-V1.5-SFT | 57.4% | 22.9% |
| DeepSeek-Prover-V1.5-RL | 60.2% | 22.6% |
| DeepSeek-Prover-V1.5-RL + RMaxTS | 63.5% | 25.3% |
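To make the comparison with DeepSeek-Prover-V1 concrete, the short sketch below recomputes absolute gains on miniF2F-test from the numbers reported in the table. The dictionary and function names are illustrative only and are not part of this repository.

```python
# Pass rates (%) on miniF2F-test, copied from the table above.
MINIF2F_TEST = {
    "DeepSeek-Prover-V1": 50.0,
    "DeepSeek-Prover-V1.5-Base": 42.2,
    "DeepSeek-Prover-V1.5-SFT": 57.4,
    "DeepSeek-Prover-V1.5-RL": 60.2,
    "DeepSeek-Prover-V1.5-RL + RMaxTS": 63.5,
}


def gain_over(baseline: str, model: str, scores: dict[str, float]) -> float:
    """Absolute gain of `model` over `baseline`, in percentage points."""
    return round(scores[model] - scores[baseline], 1)


if __name__ == "__main__":
    for name in MINIF2F_TEST:
        if name != "DeepSeek-Prover-V1":
            gain = gain_over("DeepSeek-Prover-V1", name, MINIF2F_TEST)
            print(f"{name}: {gain:+.1f} points vs. DeepSeek-Prover-V1")
```

With these figures, the RL model with RMaxTS is 13.5 points above DeepSeek-Prover-V1 on miniF2F-test, while the Base model alone is 7.8 points below it.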

3. Model Downloads

We release DeepSeek-Prover-V1.5 with 7B parameters to the public, including the Base, SFT, and RL models.

| Model | Download |
|---|---|
| DeepSeek-Prover-V1.5-Base | 🤗 HuggingFace |
| DeepSeek-Prover-V1.5-SFT | 🤗 HuggingFace |
| DeepSeek-Prover-V1.5-RL | 🤗 HuggingFace |

4. Setup Environment

Requirements

- Supported platform: Linux

- Python 3.10

Installation

- Install Lean 4

Follow the instructions on the Lean 4 installation page to set up Lean 4.

- Clone the repository

git clone --recurse-submodules git@github.com:deepseek-ai/DeepSeek-Prover-V1.5.git

cd DeepSeek-Prover-V1.5

- Install dependencies

pip install -r requirements.txt

- Build Mathlib4

cd mathlib4

lake build
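Before building, it can help to confirm that the prerequisites listed above are in place. The following is a minimal sketch of such a check; the function name `check_environment` and the reported keys are hypothetical and not part of this repository.

```python
import platform
import shutil
import sys


def check_environment() -> dict[str, bool]:
    """Report whether each prerequisite listed above appears to be satisfied."""
    return {
        "linux": platform.system() == "Linux",
        "python_3_10": sys.version_info[:2] == (3, 10),
        # `lake` is Lean 4's build tool and should be on PATH once Lean 4 is installed.
        "lake_on_path": shutil.which("lake") is not None,
        # `git` is needed to clone the repository and its mathlib4 submodule.
        "git_on_path": shutil.which("git") is not None,
    }


if __name__ == "__main__":
    for name, ok in check_environment().items():
        print(f"{name}: {'ok' if ok else 'missing'}")
```

A `missing` entry only indicates that the check failed on this machine; it does not verify the Lean 4 or Mathlib4 versions.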

5. Quick Start

You can use Hugging Face Transformers directly for model inference. quick_start.py contains a simple example that generates a proof for a problem from miniF2F and verifies it.
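As a rough illustration of inference with Transformers, the sketch below generates one candidate proof with greedy decoding. It is not a copy of quick_start.py: the Hub identifier `deepseek-ai/DeepSeek-Prover-V1.5-RL`, the prompt wording, and the helper names are assumptions, and the output is not checked by Lean here.

```python
FENCE = "`" * 3  # built at runtime so this example contains no literal code fence

# Assumed model identifier on the Hugging Face Hub; adjust it to the release you downloaded.
MODEL_ID = "deepseek-ai/DeepSeek-Prover-V1.5-RL"


def build_prompt(formal_statement: str) -> str:
    """Wrap a Lean 4 statement in a completion-style prompt (assumed format)."""
    return f"Complete the following Lean 4 code:\n\n{FENCE}lean4\n{formal_statement}"


def generate_proof(formal_statement: str, max_new_tokens: int = 512) -> str:
    """Greedy-decode one candidate proof; the result is NOT verified by Lean."""
    # Imported lazily so build_prompt works without torch/transformers installed.
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    model = AutoModelForCausalLM.from_pretrained(MODEL_ID, torch_dtype=torch.bfloat16)
    device = "cuda" if torch.cuda.is_available() else "cpu"
    model.to(device)

    inputs = tokenizer(build_prompt(formal_statement), return_tensors="pt").to(device)
    with torch.no_grad():
        output_ids = model.generate(
            **inputs, max_new_tokens=max_new_tokens, do_sample=False
        )
    # Drop the prompt tokens and keep only the newly generated continuation.
    new_tokens = output_ids[0][inputs["input_ids"].shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)
```

Calling `generate_proof("theorem two_add_two : 2 + 2 = 4 := by\n")` downloads the 7B weights on first use, so it is best run on a machine with a GPU and enough disk space.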

To reproduce the experiments in the paper, use the following command to launch an RMaxTS proof search agent:

python -m prover.launch --config=configs/RMaxTS.py --log_dir=logs/RMaxTS_results

Set CUDA_VISIBLE_DEVICES=0,1,··· to specify the GPU devices. The experiment results can then be gathered with the following command:

python -m prover.summarize --config=configs/RMaxTS.py --log_dir=logs/RMaxTS_results
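The two commands above can also be driven from Python, for example to pin GPUs per run. This is a minimal sketch: the helper names are hypothetical, and it assumes the script is started from the repository root so that the `prover` package and `configs/` directory resolve.

```python
import os
import subprocess
import sys


def build_command(module: str, config: str, log_dir: str) -> list[str]:
    """Build the `python -m <module>` command line shown above."""
    return [sys.executable, "-m", module, f"--config={config}", f"--log_dir={log_dir}"]


def gpu_env(gpu_ids: list[int]) -> dict[str, str]:
    """Copy the current environment and restrict the visible GPUs."""
    env = dict(os.environ)
    env["CUDA_VISIBLE_DEVICES"] = ",".join(str(i) for i in gpu_ids)
    return env


def run_experiment(config: str, log_dir: str, gpu_ids: list[int]) -> None:
    """Launch the proof search, then summarize its logs."""
    env = gpu_env(gpu_ids)
    # check=True raises CalledProcessError if either step exits with a non-zero status.
    subprocess.run(build_command("prover.launch", config, log_dir), env=env, check=True)
    subprocess.run(build_command("prover.summarize", config, log_dir), env=env, check=True)
```

For example, `run_experiment("configs/RMaxTS.py", "logs/RMaxTS_results", [0, 1])` runs the search on GPUs 0 and 1 and then gathers the results from the same log directory.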

6. Questions and Bugs

- For general questions and discussions, please use GitHub Discussions.

- To report a potential bug, please open an issue.

7. License

This code repository is licensed under the MIT License. The use of DeepSeekMath models is subject to the Model License. DeepSeekMath supports commercial use.

See LICENSE-CODE and LICENSE-MODEL for more details.

8. Citation

@article{xin2024deepseekproverv15harnessingproofassistant,
  title={DeepSeek-Prover-V1.5: Harnessing Proof Assistant Feedback for Reinforcement Learning and Monte-Carlo Tree Search},
  author={Huajian Xin and Z. Z. Ren and Junxiao Song and Zhihong Shao and Wanjia Zhao and Haocheng Wang and Bo Liu and Liyue Zhang and Xuan Lu and Qiushi Du and Wenjun Gao and Qihao Zhu and Dejian Yang and Zhibin Gou and Z. F. Wu and Fuli Luo and Chong Ruan},
  year={2024},
  eprint={2408.08152},
  archivePrefix={arXiv},
  primaryClass={cs.CL},
  url={https://arxiv.org/abs/2408.08152},
}

9. Contact

If you have any questions, please raise an issue or contact us at service@deepseek.com.